Without a network, a computer has little if any use in daily life. Networking enables the internet and the SaaS-based applications that come with it.

While necessary, networking has always been a tricky problem to solve, and this is especially true when building modern, distributed applications. Cloud native networking involves building bridges between large numbers of components spread across many hosts and environments. Managing the flow of traffic across these bridges is even more complicated, and rate limiting is a vital part of doing so.

What is rate limiting?

Rate limiting is best understood with the analogy of the leaky bucket. Imagine you are pouring water into a bucket that has holes at the bottom. The bucket can hold a certain amount of water, and it loses water at a pace determined by the number of holes. It will eventually overflow if you pour water faster than the bucket leaks.

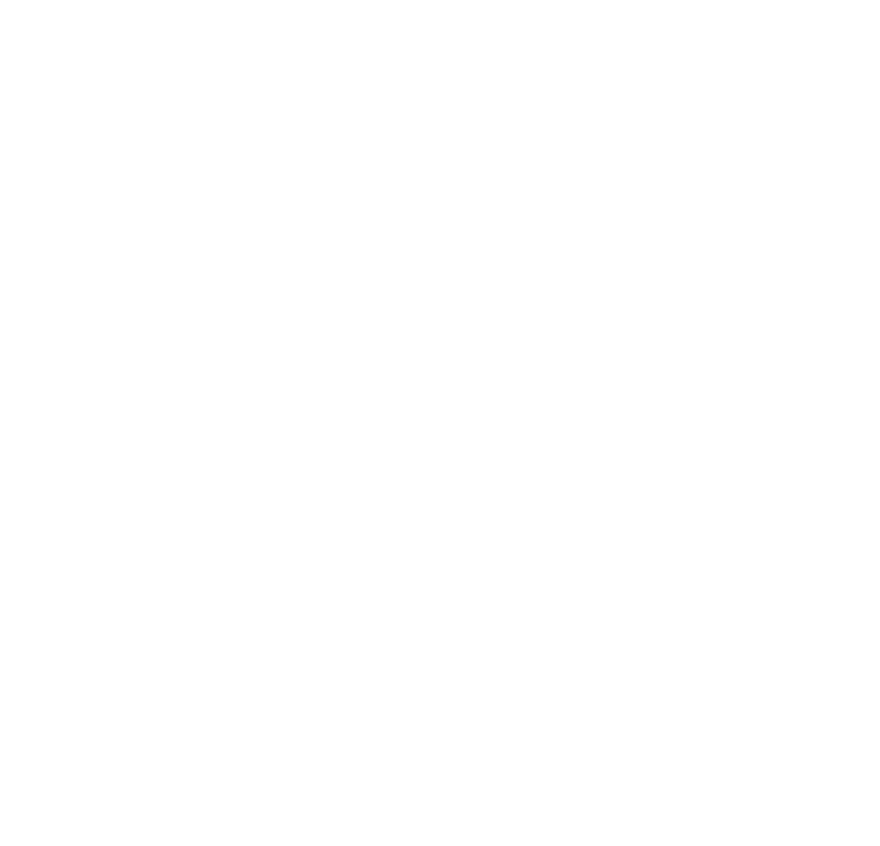

At its simplest, traffic from the internet arrives at your reverse proxy, which passes it through rate limiting middleware before routing it to the right Service. Your infrastructure can only bear a certain traffic load before its servers go down, taking your website with them. Rate limiting matches the flow of traffic to your infrastructure’s capacity.

There are two parameters to take into account. The rate is the number of requests the middleware lets through per unit of time, and the burst is the maximum number of requests it can hold at any one moment. In the analogy, the rate is how fast the bucket leaks, and the burst is how much water the bucket can hold. You, the user, adjust the rate and burst as needed to maintain a healthy traffic flow.

What happens if your rate limit is exceeded?

If your rate limit is exceeded and the bucket is full, your proxy bounces incoming requests back to their source with a status code telling the client to stop sending requests for now. The code is typically HTTP 429 Too Many Requests (exposed as StatusTooManyRequests in Go's net/http package). The infrastructure is at maximum capacity and will not accept more traffic until enough water has leaked out of the bucket.

How is rate limiting a form of traffic shaping?

Rate limiting is a form of traffic shaping: it lets you control the flow and distribution of traffic from the internet so your infrastructure does not become overloaded and fail. Without traffic shaping, traffic would pile up in a queue waiting to enter your proxy, causing congestion.

Rate limiting also makes sure your proxy doesn’t favor certain sources of traffic from the internet over others. Within your rate limiting middleware, each source receives its own rate limiter. You choose the configuration, defining the rate (requests per second) and burst for your rate limiters, and all rate limiters in a given instance of the middleware share that configuration. If you want to rate limit two or more sources differently (with different configuration parameters), you have to use two or more rate limiting middleware instances.

For example, you can configure your rate limiting middleware to allow each source five requests per second with a burst of ten requests. If one source reaches that capacity before others, it must wait until its bucket is less full before sending more requests. If a source’s bucket is full or almost full, you can temporarily increase your rate limit to accommodate that busy source until its bucket is no longer overflowing.
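A quick calculation shows how fast that capacity can be reached. Assume, hypothetically, a source sending a steady 8 requests per second to middleware configured with rate r = 5 requests per second and burst b = 10, and treat the bucket as draining continuously:

$$
\begin{aligned}
\text{net fill rate} &= 8 - r = 8 - 5 = 3\ \text{requests/s} \\
t_{\text{full}} &= \frac{b}{8 - r} = \frac{10}{3} \approx 3.3\ \text{s} \\
\text{rejected once full} &= 8 - r = 3\ \text{requests/s}
\end{aligned}
$$

From then on the source gets r = 5 requests per second through, with one slot freeing up every 1/r = 0.2 s as the bucket leaks.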

How can you define sources?

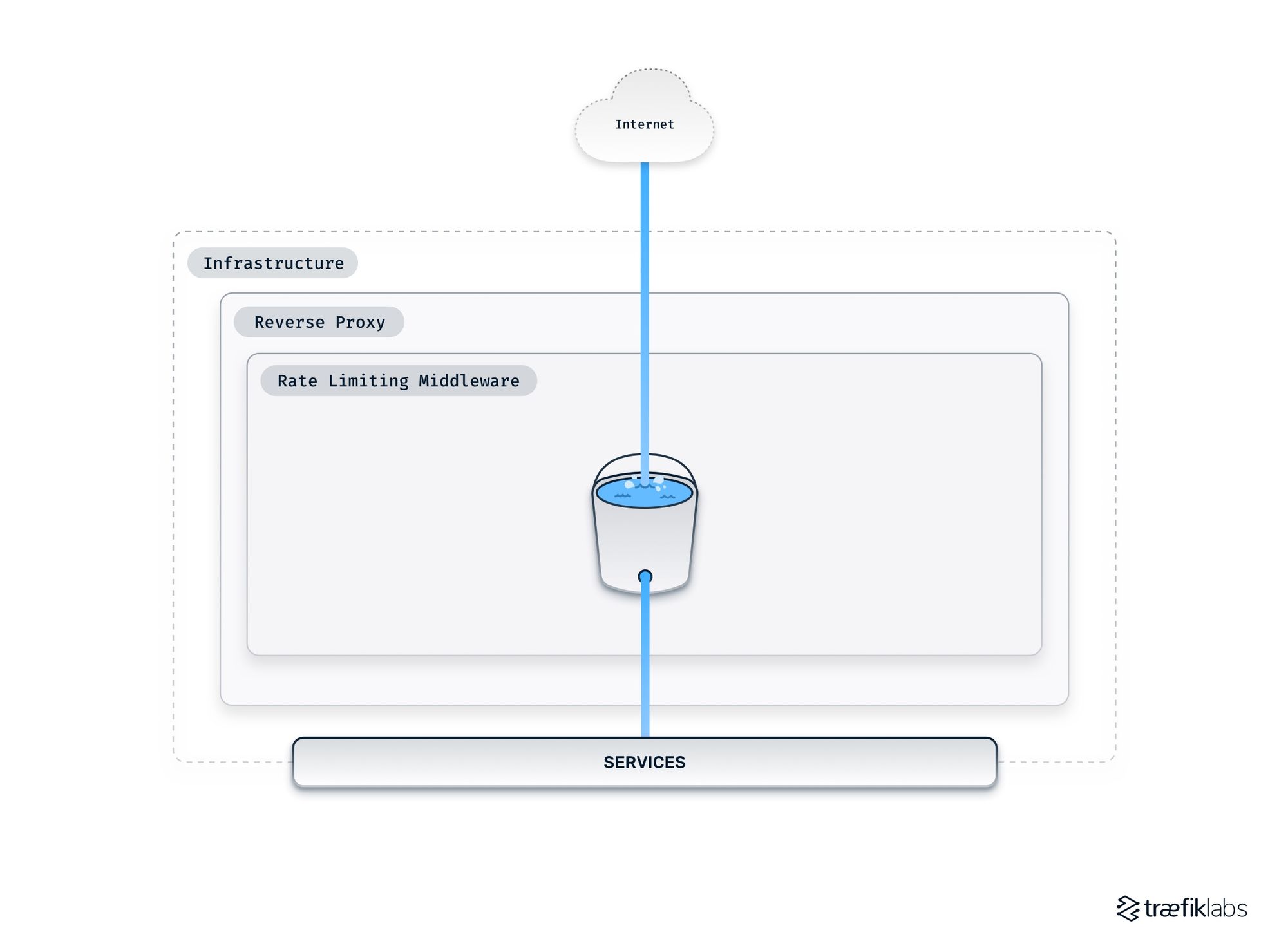

By default, your proxy treats each remote IP address as a distinct source. However, IP addresses can be deceptive. If multiple users reach you through the same intermediary, for example Cloudflare or their organization’s own proxy, their requests all arrive from that intermediary’s IP address, and your proxy will view them as a single source.

Given that each rate limiting middleware has a distinct rate limiter for each source, how you define sources is very important. One source per IP address is not always what you want: you might, for example, want to aggregate all the IPs belonging to a given organization and treat them as one source.

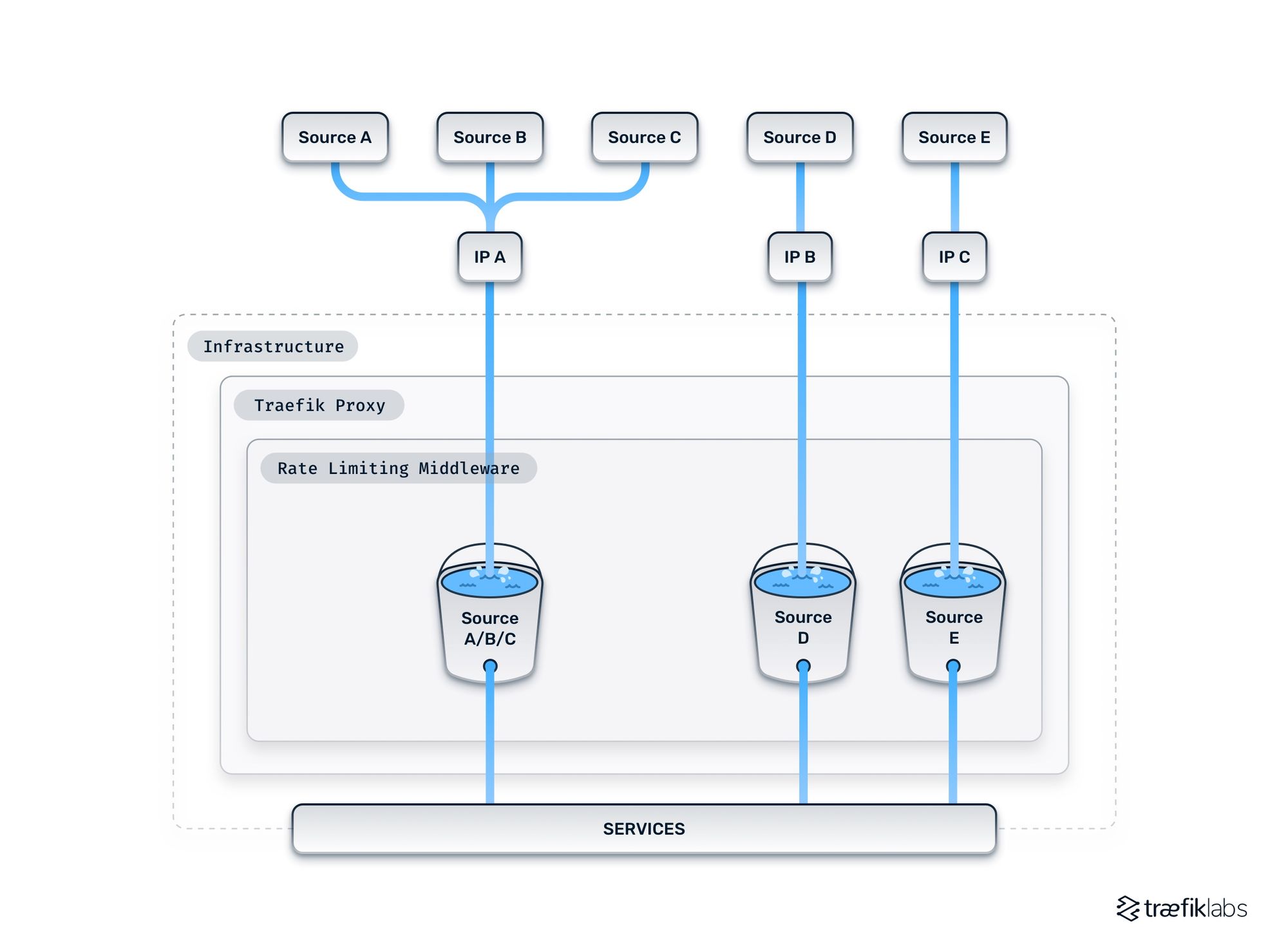

A configuration option in Traefik Proxy called source criterion (sourceCriterion) allows you to define what should be considered a source. This configuration takes effect in what we call a source extractor, which acts as part of the rate limiting middleware and decides which requests should be grouped together as coming from the same source. Doing so lets you distribute rate limits fairly among all sources.

The source criterion feature also lets you customize the distribution of traffic by prioritizing certain users over others. You can group specific sources together as one while leaving others as independent sources.

How does rate limiting help security?

Reverse proxies can be configured to improve application security, and rate limiting is one way to do so. Because it lets you control the flow of traffic, rate limiting is crucial to keeping your application secure and resilient. Without it, malicious parties can exhaust your infrastructure’s resources; with it, no individual user can flood your system by overwhelming your proxies with requests.

In brute force attacks, hackers use automation to send huge numbers of requests, such as password guesses, hoping that one will succeed. In Denial of Service attacks, hackers flood a system with requests to take an application down entirely. The same kind of flood can also happen accidentally, for example when a bug makes a client resend failed requests over and over. Rate limiting is a potent safeguard against both kinds of attack.

What is API rate limiting?

Rate limiting helps keep your infrastructure operational and receptive to different sources of traffic. You can also apply rate limiting to different parts of your APIs. Even if your infrastructure can, at least in theory, bear the load of the traffic you receive, exposing your APIs to the internet still costs you money. The cost increases with the amount of traffic your APIs receive (because you need more servers, more bandwidth, etc.), which is why you might want to control these costs and protect your APIs with rate limiting.

API rate limiting also offers businesses several advantages. For example, you can give authenticated users, who have logged into their accounts, higher quotas and access to more features. Similarly, you can offer customers different tiers of service, each with its own quotas, so that paying customers get higher limits and more features.

Rate limiting in different proxies

Today, virtually every modern proxy offers rate limiting. It is a core feature that users expect and need. However, not all proxies implement rate limiting the same way, and their configuration differs in a few ways.

Rate limiting middleware can be written in different languages, and each implementation relies on what its language’s libraries provide. Traefik Proxy is written in Go, and its rate limiting middleware relies on golang.org/x/time/rate, a library maintained by the Go project that implements a solid, well-understood token bucket algorithm. The library is widely trusted, and because Go is memory-safe, it is less likely to have memory-related bugs. Other reverse proxies are written in other languages; NGINX, for example, is written in C.

What is distributed rate limiting?

Some organizations have complex networks and therefore require networking solutions with advanced rate limiting. Traefik Enterprise supports distributed rate limiting. It’s a middleware just like Traefik Proxy’s rate limiting middleware, but it limits requests over time throughout your cluster, not just on an individual proxy. Because it applies one global rate limit to traffic across all proxies, a source cannot multiply its allowance by spreading its requests over several proxy instances.

Summary

Rate limiting is a key part of any modern, cloud native networking strategy. It helps keep applications secure and resilient and ensures that users are treated fairly.

Understanding rate limiting middleware is fundamental to any healthy infrastructure network, and learning how to rate limit is both important and easy.

Traefik Proxy makes rate limiting very simple. Check out the Traefik Proxy documentation for more information on rate limiting.

For advanced rate limiting, an advanced networking solution such as Traefik Enterprise can help. Combining API management, ingress control, and service mesh, Traefik Enterprise offers advanced rate limiting middleware that eases networking complexity. Check out the Traefik Enterprise documentation to learn more.