Traefik Proxy 2.x and Kubernetes 101

Hello and welcome!

In this post, I will walk you through the process of working with Traefik Proxy 2.x on Kubernetes and I’ll discuss common application deployment scenarios along with the involved Traefik Proxy 2.x concepts.

Let’s dig in.

Prerequisites

Before we jump into the matter at hand, let’s have a look at a few prerequisites. If you want to follow along with this tutorial, you'll need to have a few things set up first:

- A working Kubernetes cluster - The Traefik Labs team often uses k3d for this purpose. It creates a local Kubernetes cluster using k3s, which is packaged with Traefik Proxy version 2.4.8. You also need to map ports 80 and 443 to the load balancer so that Traefik can handle HTTP and HTTPS requests:

$ k3d cluster create dash -p "80:80@loadbalancer" -p "443:443@loadbalancer"

- The kubectl command-line tool - Make sure you configure it to point to your cluster. If you created your cluster using k3d and the instructions above, this will already be done for you.

- Add a Kubernetes Namespace named dev - This is used for deploying your applications.

$ kubectl create namespace dev

Traefik Proxy 2.x

Traefik Proxy 2.x integrates with the Kubernetes Ingress object through annotations. Make sure these annotations are set in the metadata of each Kubernetes Ingress object.

k3s has the concept of add-ons that can be used to customize the cluster. Traefik Proxy is installed as an add-on in the kube-system namespace:

$ kubectl describe AddOn traefik -n kube-system

k3s configures Traefik Proxy to handle incoming HTTP and HTTPS requests and it also enables the API and the dashboard.

Details (optional)

Traefik Proxy has the concept of entrypoints. Entrypoints configure the network ports on which Traefik Proxy receives incoming requests. The Traefik Helm chart deployment creates the following entrypoints:

- web: It is used for all HTTP requests. The Kubernetes LoadBalancer service maps port 80 to the web entrypoint.

- websecure: It is used for all HTTPS requests. The Kubernetes LoadBalancer service maps port 443 to the websecure entrypoint.

- traefik: Kubernetes uses the traefik entrypoint for pod liveness checks. The Traefik dashboard and API are available on the traefik entrypoint.

Applications are configured either on the web or the websecure entrypoints.

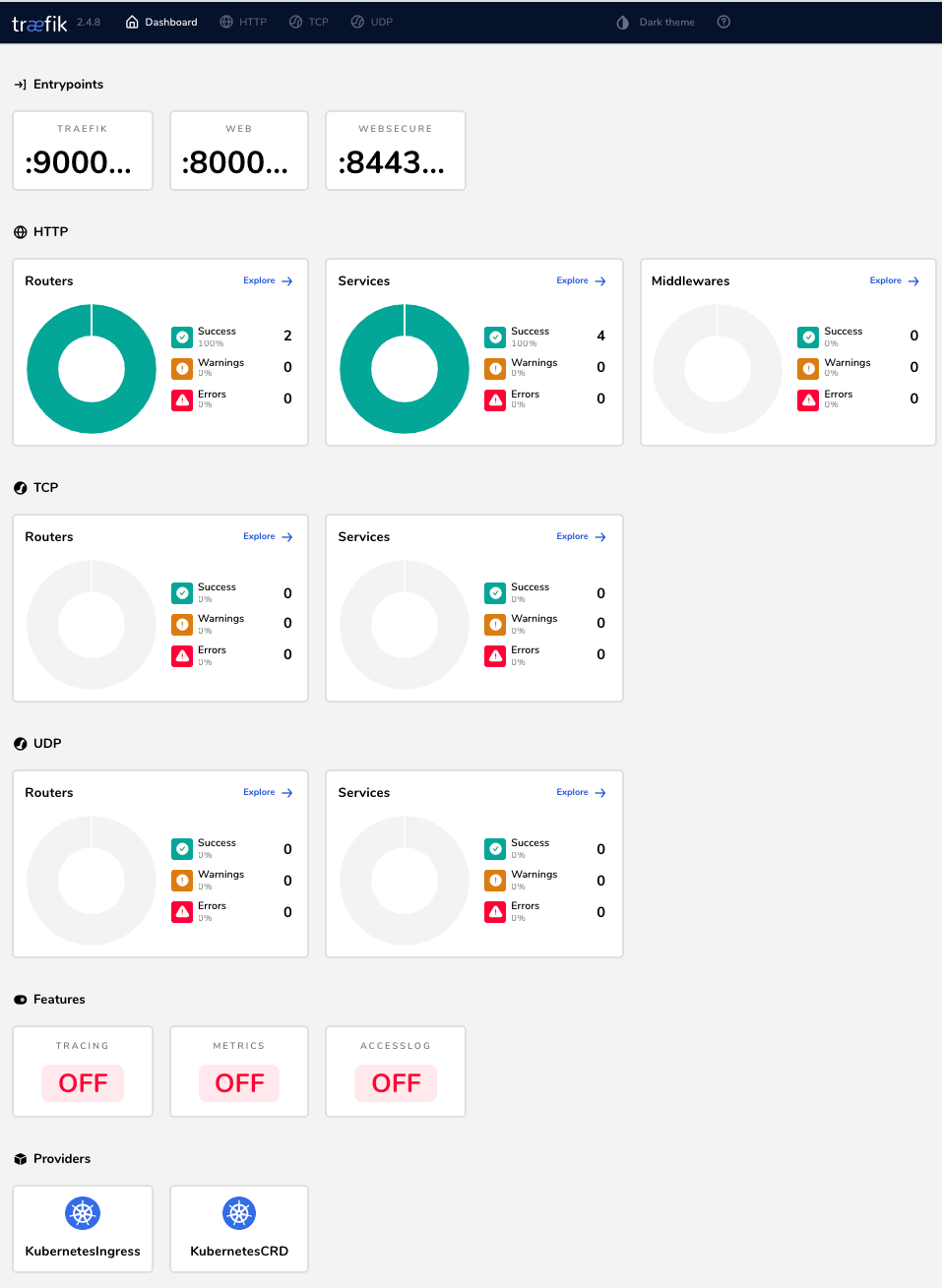

Traefik Proxy integrates with Kubernetes through the KubernetesIngress, KubernetesCRD, and Gateway API providers. The KubernetesIngress provider works with the Ingress objects that Kubernetes provides, and it is the provider described in this post. Traefik Proxy also provides Kubernetes Custom Resource Definitions for its components, which are supported by the KubernetesCRD provider. Finally, there are Gateway resources for integrating with the alpha version of the Kubernetes Gateway API.

The Helm chart enables KubernetesIngress and KubernetesCRD providers.

Traefik Proxy dashboard

Let's get access to the Traefik Proxy dashboard. The dashboard shows changes as you execute the various deployment scenarios. The Traefik API is not accessible from outside the Kubernetes cluster, so you need to set up port-forwarding to the Traefik pod deployed in the kube-system namespace by using the following command:

$ kubectl port-forward -n kube-system "$(kubectl get pods -n kube-system| grep '^traefik-' | awk '{print $1}')" 9000:9000

Forwarding from 127.0.0.1:9000 -> 9000

The Traefik dashboard is now accessible at http://localhost:9000/dashboard/.

Deploy application

We need to deploy our whoami application for the host myapp.127.0.0.1.nip.io. The complete process involves the following three steps:

- Create a whoami Kubernetes Deployment. It will deploy the whoami application pod in the dev namespace.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: whoami
  namespace: dev
  labels:
    app: whoami
spec:
  replicas: 1
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      containers:
        - image: docker.io/traefik/whoami:v1.6.1
          name: whoami
          ports:
            - containerPort: 80

- Create a whoami Kubernetes Service for the deployed application pod.

apiVersion: v1
kind: Service
metadata:
  name: whoami
  namespace: dev
spec:
  ports:
    - name: whoami
      port: 80
      targetPort: 80
  selector:
    app: whoami

- Create a whoami Kubernetes Ingress. The Ingress configuration listens for all incoming requests on myapp.127.0.0.1.nip.io. It will delegate the request to the deployed whoami Kubernetes Service.

kind: Ingress
apiVersion: networking.k8s.io/v1
metadata:
  name: whoami
  namespace: dev
  annotations:
    traefik.ingress.kubernetes.io/router.entrypoints: web
spec:
  rules:
    - host: myapp.127.0.0.1.nip.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: whoami
                port:
                  number: 80

Quick explanation

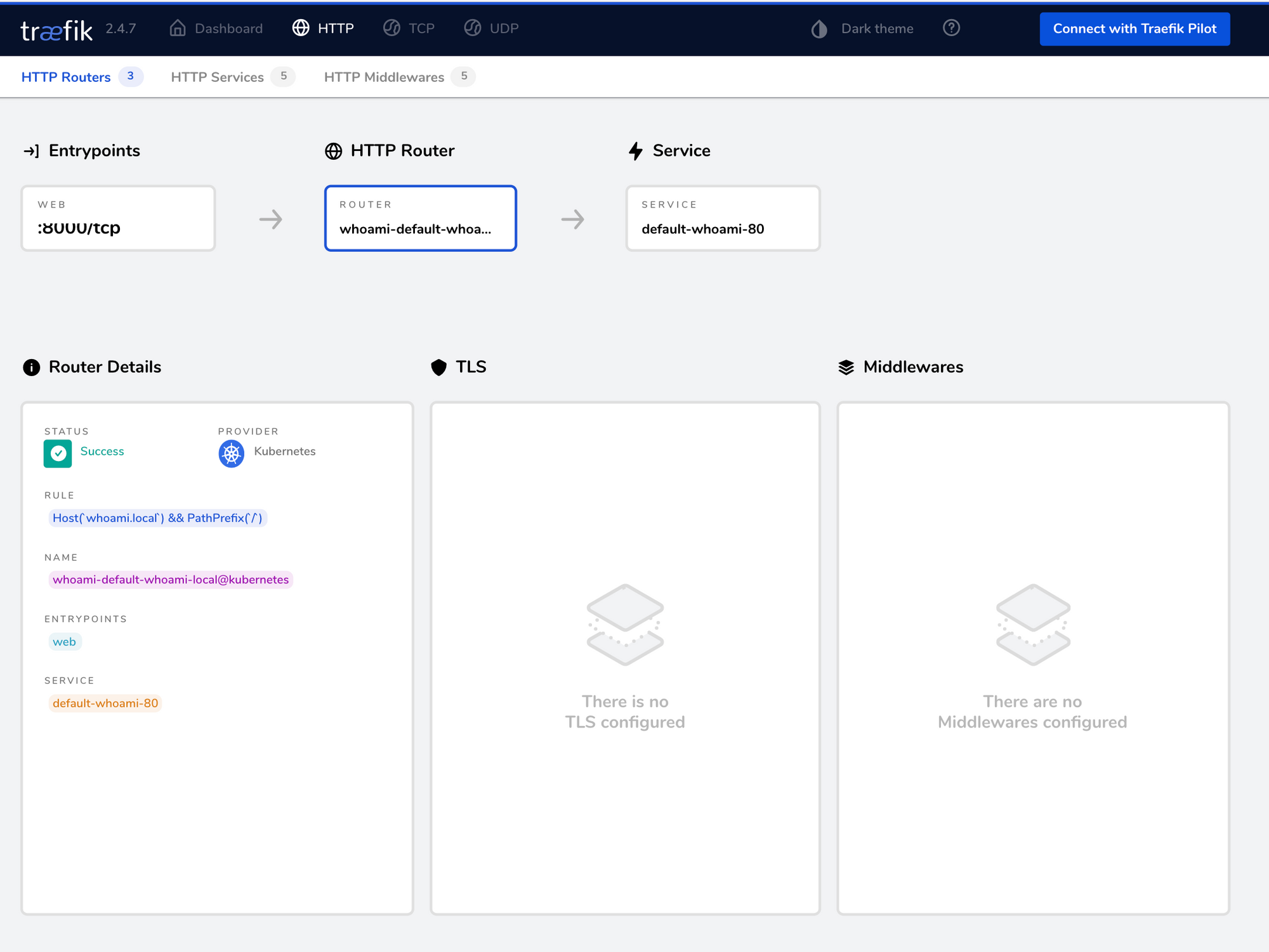

The above configuration listens for HTTP requests arriving on the web entrypoint. It evaluates the request host header for the value myapp.127.0.0.1.nip.io. A matching request is then forwarded to the whoami application container.

You can find the newly created router, with the associated service, under the HTTP routers section of the Traefik dashboard.

Details (optional)

Traefik Proxy 2.x has the concept of routers. A router is responsible for handling incoming requests, as per the specified criteria. A matching request is then forwarded to the application's location in the Kubernetes cluster. The above configuration performs a hostname check. Traefik Proxy supports all Kubernetes Ingress rules to build the required criteria.

The whoami Kubernetes Ingress object is used to create a router. It listens for requests arriving on one or more entrypoints specified by the traefik.ingress.kubernetes.io/router.entrypoints annotation. The Kubernetes Ingress spec specifies the request matching rules as well as the backend Kubernetes Service. These attributes are used to configure the rules and service details of the created router.

Configure authentication

Without any authentication, the application is exposed and anyone can access it. You can secure the application by configuring an authentication mechanism. The configuration presented below adds basic authentication to the whoami application. The configuration requires a username and password combination stored as a Kubernetes Secret. The password must be hashed using a method like MD5, SHA1, or BCrypt. It is best to generate the hashed combination using the htpasswd command.

apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: test-auth
  namespace: dev
spec:
  basicAuth:
    secret: userssecret
---
kind: Ingress
apiVersion: networking.k8s.io/v1
metadata:
  name: "whoami"
  namespace: dev
  annotations:
    traefik.ingress.kubernetes.io/router.entrypoints: web
    traefik.ingress.kubernetes.io/router.middlewares: dev-test-auth@kubernetescrd
spec:
  rules:
    - host: myapp.127.0.0.1.nip.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: whoami
                port:
                  number: 80
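The Middleware above refers to a Secret named userssecret, which has to exist in the dev namespace. As a minimal sketch, assuming the Secret keeps htpasswd-style user:hash lines under a users key (the layout described in the Traefik basicAuth documentation), a small shell helper can turn htpasswd output into a manifest. The helper name make_users_secret and the credentials in the usage comment are hypothetical.

```shell
# make_users_secret NAME NAMESPACE
# Reads htpasswd-style "user:hash" lines from stdin and prints a Secret
# manifest whose "users" key holds those lines, base64-encoded.
# (Hypothetical helper, not part of Traefik or kubectl.)
make_users_secret() {
  name="$1"
  namespace="$2"
  # tr removes the line wrapping that some base64 implementations add.
  encoded="$(base64 | tr -d '\n')"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
  namespace: ${namespace}
data:
  users: ${encoded}
EOF
}

# Possible usage, assuming htpasswd and kubectl are installed:
#   htpasswd -nb user password | make_users_secret userssecret dev | kubectl apply -f -
```

Printing the manifest instead of applying it directly keeps the helper free of cluster access, so the output can be reviewed or stored before it is piped to kubectl.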

Quick explanation

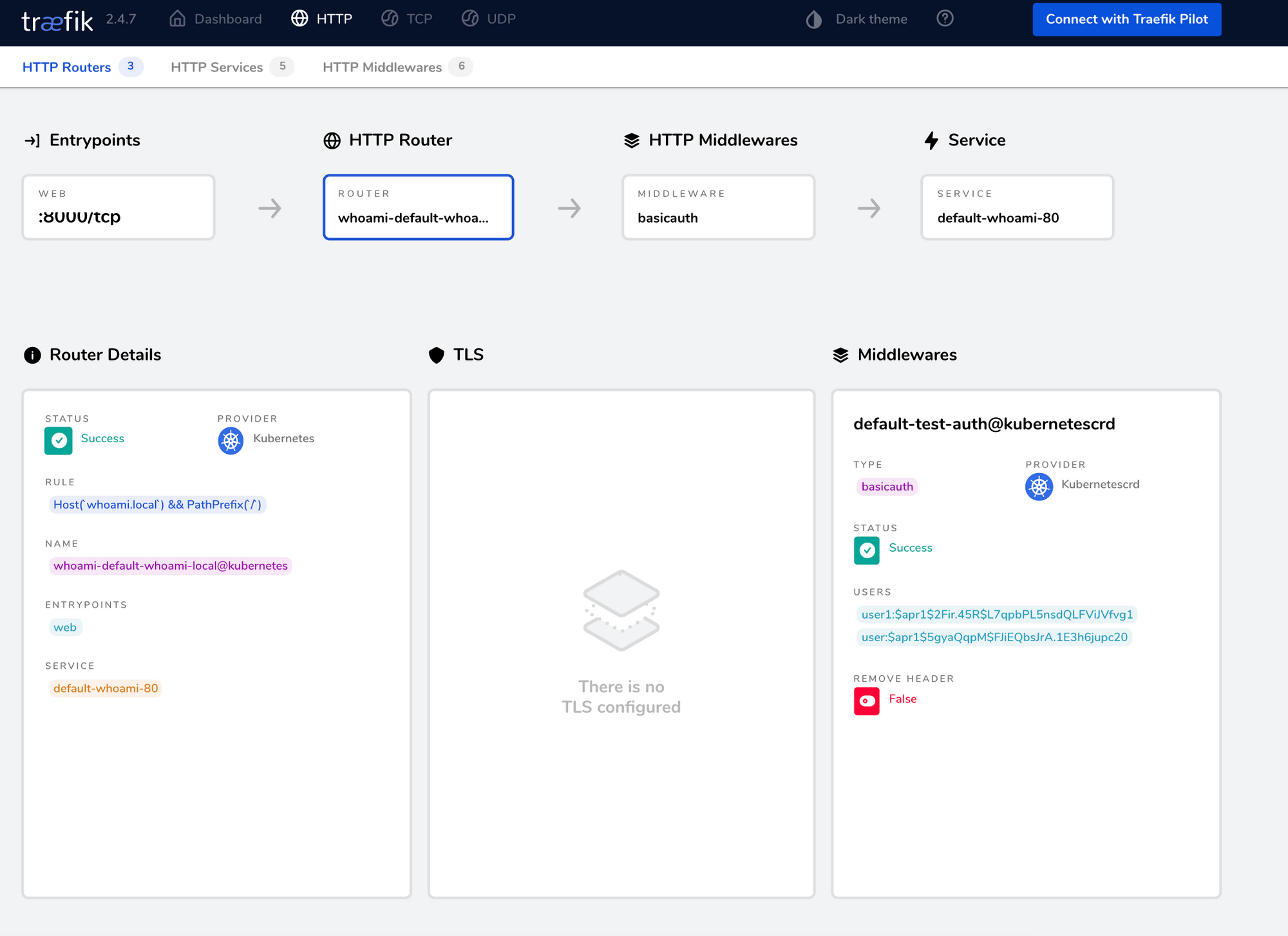

The configuration above listens for HTTP requests arriving on the web entrypoint. It evaluates the request host header for the value myapp.127.0.0.1.nip.io and authenticates the request using the dev-test-auth Middleware, enabled by the traefik.ingress.kubernetes.io/router.middlewares annotation, before forwarding it to the whoami Kubernetes Service.

The Traefik dashboard will show the updated router with the authentication middleware. Middlewares in Traefik Proxy belong to their specified namespace and must be referred to using the convention <namespace>-<middleware-name>@<provider>.
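To make the naming convention concrete, here is a small sketch that assembles the reference string from its parts. The helper name middleware_ref is hypothetical, and defaulting the provider to kubernetescrd is an assumption that matches the examples in this post.

```shell
# middleware_ref NAMESPACE NAME [PROVIDER]
# Builds the value expected by the router.middlewares annotation:
#   <namespace>-<middleware-name>@<provider>
# (Hypothetical helper; the provider defaults to kubernetescrd.)
middleware_ref() {
  printf '%s-%s@%s\n' "$1" "$2" "${3:-kubernetescrd}"
}

middleware_ref dev test-auth
# prints: dev-test-auth@kubernetescrd
middleware_ref dev https-redirect-scheme kubernetescrd
# prints: dev-https-redirect-scheme@kubernetescrd
```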

Details (optional)

Traefik Proxy provides Middleware for various request pre and post-processing needs like authentication, rate limiting, compression, etc. Multiple preprocessors can be executed in a chain before forwarding a request to the underlying endpoint. Traefik Proxy-provided middleware is created by using the Middleware KubernetesCRD.

The Middleware KubernetesCRD definition has attributes for the different parameters of a Middleware resource and you can reuse the created Middleware resource across multiple Ingress objects and multiple times on the same Ingress object.

TLS termination

Traefik Proxy provides TLS support using minimal parameters. It takes four parameters to configure automatic certificate generation and two additional annotations to use these certificates on a Traefik Proxy-configured domain.

Enable ACME

Traefik Proxy facilitates the automated generation of TLS certificates by using Let's Encrypt. The Let's Encrypt service is enabled through the Traefik static configuration.

apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: traefik
  namespace: kube-system
spec:
  valuesContent: |-
    additionalArguments:
      - "--log.level=DEBUG"
      - "--certificatesresolvers.le.acme.email=<your-email-address>"
      - "--certificatesresolvers.le.acme.storage=/data/acme.json"
      - "--certificatesresolvers.le.acme.tlschallenge=true"
      - "--certificatesresolvers.le.acme.caServer=https://acme-staging-v02.api.letsencrypt.org/directory"

You must update the Traefik Proxy deployment using the above HelmChartConfig:

$ kubectl apply -f traefik-update.yaml

Quick explanation

Traefik Proxy calls the Let's Encrypt service, using the parameters specified by the certificatesresolvers attributes, to generate and renew TLS certificates. It downloads the generated certificates and associates them with the configured router.

Details (optional)

Traefik Proxy certificatesresolvers is a static configuration, which must be provided at startup. The static configuration is responsible for configuring the Kubernetes Ingress provider. The provider then listens for dynamic configuration using the annotations as outlined in the docs.

Make sure to specify certificatesresolvers for the various domains you own. Each configured certificatesresolvers entry is named, and the name is given by the second value of the parameter name:

- In the configuration above, le is the name of our certificatesresolvers entry. The le resolver is of type acme, which is denoted by the third value of the parameter name.

- The last value of the parameter name points to ACME-specific attributes. email is used to determine the Let's Encrypt account. storage points to the location of certificate storage on disk. Let's Encrypt uses various challenges for certificate generation and, in our example, we are using the tlschallenge. caServer provides the location of the Let's Encrypt server.
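The anatomy of a parameter name described above can be illustrated with a short sketch that splits one of the flags into its parts. The helper name describe_resolver_flag is hypothetical; it only does string handling and does not talk to Traefik.

```shell
# describe_resolver_flag FLAG
# Splits a flag such as
#   --certificatesresolvers.le.acme.storage=/data/acme.json
# into resolver name, resolver type, attribute and value.
# (Hypothetical helper for illustration only.)
describe_resolver_flag() {
  flag="${1#--}"            # drop the leading dashes
  key="${flag%%=*}"         # everything before the first "="
  value="${flag#*=}"        # everything after the first "="
  rest="${key#*.}"          # drop the "certificatesresolvers." prefix
  resolver="${rest%%.*}"    # second value: the resolver name
  rest="${rest#*.}"
  kind="${rest%%.*}"        # third value: the resolver type
  attribute="${rest#*.}"    # last value: the type-specific attribute
  printf 'resolver=%s type=%s attribute=%s value=%s\n' \
    "$resolver" "$kind" "$attribute" "$value"
}

describe_resolver_flag "--certificatesresolvers.le.acme.storage=/data/acme.json"
# prints: resolver=le type=acme attribute=storage value=/data/acme.json
```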

Please note that it is recommended to use the acme-staging server while working on staging or debugging environments. This is often required to bypass the limits enforced by the acme-production server.

Use websecure

Next, let’s enable TLS configuration on the Kubernetes Ingress. The configuration will work on the HTTPS protocol, which is supported by the websecure entrypoint.

kind: Ingress
apiVersion: networking.k8s.io/v1
metadata:
  name: "whoami"
  namespace: dev
  annotations:
    traefik.ingress.kubernetes.io/router.entrypoints: websecure
    traefik.ingress.kubernetes.io/router.tls.certresolver: le
    traefik.ingress.kubernetes.io/router.middlewares: dev-test-auth@kubernetescrd
spec:
  rules:
    - host: myapp.127.0.0.1.nip.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: whoami
                port:
                  number: 80

Quick explanation

The Ingress object creates a router for requests on the websecure entrypoint. The router is associated with the le certificate resolver configuration. The Traefik dashboard shows the updated router with its TLS details and the websecure entrypoint.

Details (optional)

Previously, we discussed that port 443 is mapped to the websecure entrypoint, so you must use the websecure entrypoint for the whoami router. When a Traefik Proxy router receives a request on the websecure entrypoint, it asks the le resolver for a TLS certificate. The resolver either provides a stored certificate or generates a new one based on the provided values.

Enable HTTPS redirect

Now that TLS is working fine, let’s redirect all HTTP requests to HTTPS. This ensures that a user who accesses the HTTP location is redirected to the correct URL instead of receiving a 404 error. We can use the redirectScheme Middleware, which lets us configure the target scheme and port, as shown below.

apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: https-redirect-scheme
  namespace: dev
spec:
  redirectScheme:
    scheme: https
    port: "443"
---
kind: Ingress
apiVersion: networking.k8s.io/v1
metadata:
  name: "webredirect"
  namespace: dev
  annotations:
    kubernetes.io/ingress.class: traefik
    traefik.ingress.kubernetes.io/router.entrypoints: web
    traefik.ingress.kubernetes.io/router.middlewares: dev-https-redirect-scheme@kubernetescrd
spec:
  rules:
    - host: myapp.127.0.0.1.nip.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: whoami
                port:
                  number: 80

Quick explanation

The Ingress object listens for requests on the web entrypoint. Traefik Proxy then redirects them to the location configured by the https-redirect-scheme Middleware.
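One way to check the redirect from the command line is sketched below, assuming curl is installed and the cluster from this tutorial is reachable on port 80. The helper name redirect_location is hypothetical; it sends a HEAD request and prints the Location header of the response, if there is one.

```shell
# redirect_location URL
# Prints the Location header returned for URL, or nothing if the
# response carries no redirect. (Hypothetical helper built on curl.)
redirect_location() {
  # -s silences progress output, -I requests only the response headers.
  curl -sI "$1" \
    | tr -d '\r' \
    | awk 'tolower($1) == "location:" { print $2 }'
}

# Possible usage against the tutorial host:
#   redirect_location http://myapp.127.0.0.1.nip.io/
```

If the Middleware is active, the printed value should start with https://.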

Securing the dashboard

So far, we discussed all aspects of configuring an application in Traefik Proxy and we looked at Router, Services, Middleware, and TLS termination. Now, you can leverage these components to secure the dashboard for your Kubernetes cluster.

apiVersion: v1
kind: Service
metadata:
  name: traefik-dashboard
  namespace: kube-system
  labels:
    app.kubernetes.io/instance: traefik
    app.kubernetes.io/name: traefik-dashboard
spec:
  type: ClusterIP
  ports:
    - name: traefik
      port: 9000
      targetPort: traefik
      protocol: TCP
  selector:
    app.kubernetes.io/instance: traefik
    app.kubernetes.io/name: traefik
---
kind: Ingress
apiVersion: networking.k8s.io/v1
metadata:
  name: "traefik-dashboard"
  namespace: kube-system
  annotations:
    traefik.ingress.kubernetes.io/router.entrypoints: websecure
    traefik.ingress.kubernetes.io/router.tls: "true"
    traefik.ingress.kubernetes.io/router.middlewares: dev-test-auth@kubernetescrd
spec:
  rules:
    - host: traefik.myapp.127.0.0.1.nip.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: traefik-dashboard
                port:
                  number: 9000

The above configuration deploys a secured dashboard that is accessible only to authorized users. You can now stop the port-forwarding that was set up initially and start using the secured dashboard.
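To confirm that the dashboard now asks for credentials, a quick check along these lines may help. It is a sketch that assumes curl is installed; the helper name http_status and the user:password pair are hypothetical, and -k is used because the certificate served for this local host is not expected to be trusted by the client.

```shell
# http_status URL [USER:PASSWORD]
# Prints the HTTP status code returned for URL, optionally sending
# basic-auth credentials. (Hypothetical helper built on curl.)
http_status() {
  url="$1"
  credentials="${2:-}"
  if [ -n "$credentials" ]; then
    curl -sk -o /dev/null -w '%{http_code}\n' -u "$credentials" "$url"
  else
    curl -sk -o /dev/null -w '%{http_code}\n' "$url"
  fi
}

# Possible usage against the dashboard host from this post:
#   http_status https://traefik.myapp.127.0.0.1.nip.io/dashboard/
#   http_status https://traefik.myapp.127.0.0.1.nip.io/dashboard/ user:password
```

With the basic authentication Middleware in place, the first call would be expected to report an unauthorized status and the second a successful one.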

In this article, I explained the essential concepts of dealing with Traefik Proxy 2.x in Kubernetes clusters. I hope this tutorial helps you employ the Traefik Kubernetes Ingress integration.

Thanks for reading!