Traefik and Docker: A Discussion with Docker Captain, Bret Fisher

Bret Fisher is the creator of “Docker Mastery” and part of the Docker Captain program.

During the past summer, we had an interesting discussion about Traefik and Docker with Bret Fisher, the creator of “Docker Mastery” and part of the Docker Captain program.

We’d like to share our discussion with you. Read on to learn more about Bret, and his experience using Traefik and Docker.

Containous: Hi Bret, could you introduce yourself to our community?

Bret: Hi, I’m Bret Fisher, a freelance DevOps and Docker consultant focused on containers. I’m also a Docker Captain. My time is split between teaching, helping students, and answering questions.

I’m driven by having fun while helping people.

Containous: Could you explain what a “Docker Captain” is?

Bret: The “Docker Captain” program is run by Docker Inc. A Docker Captain is like a Microsoft MVP: you can’t work for Docker Inc., you should be an expert on Docker tools, and you should share your love for containers often. The program also gives you access to pre-release Docker software.

Containous: How did you get into Traefik?

Bret: Because of Docker Swarm mode. Even though Kubernetes has become the most popular orchestrator, Docker Swarm still solves a lot of problems for many teams. I was looking for an easy “Ingress” for Docker Swarm, and a fellow Docker Captain recommended Traefik.

I liked Traefik because of its out-of-the-box Let’s Encrypt support and because “It just works”.

Containous: Where do you use Traefik?

Bret: I’m using it on my own website at https://www.bretfisher.com/. This website runs on Docker Swarm with Traefik. I also recommend Traefik to my customers and in my talks and workshops. A lot of my 120,000 students have ended up using Traefik.

Containous: Let’s talk a bit about container security. Are you running Traefik as the user “root”?

Bret: Is it the default? (Laughs) I’m using the official Docker image, so I guess “yes,” if root is the default user in that container image.

Containous: Do you see any compensating measures for this?

Bret: First of all, I have a list of general container security activities that I follow and recommend others check out. It follows an order of “easiest steps with biggest benefits first,” so you don’t spend time on hard security changes that do little to improve your overall security posture.

In addition, consider running Traefik’s container in “read-only” mode. This mode forbids any writes to the filesystem (unless you explicitly define a volume on a specific path). This first step prevents some (but not all!) common actions, such as installing packages or downloading scripts, if a bad actor gains control of the container’s file system. You could also enable the “user namespaces” feature in the Docker Engine config, which maps the root user of containers to an unprivileged user on the host machine. I’m a big fan of that one.
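To confirm from inside a running container that both measures are in effect, the process can inspect its own environment. The sketch below is a minimal Python example and assumes a Linux container. It checks the read-only flag of the root mount with `os.statvfs`, which is what `docker run --read-only` sets. It also parses the kernel’s `/proc/self/uid_map` format, where each line is an `inside outside count` triple, to find which host UID the container’s root maps to. The function names are hypothetical helpers for illustration and are not part of Docker or Traefik.

```python
import os
from typing import List, Optional, Tuple

UidRange = Tuple[int, int, int]  # (first UID inside, first UID outside, range length)


def root_fs_is_read_only(path: str = "/") -> bool:
    """True if the filesystem mounted at `path` is read-only."""
    return bool(os.statvfs(path).f_flag & os.ST_RDONLY)


def parse_uid_map(text: str) -> List[UidRange]:
    """Parse the contents of /proc/<pid>/uid_map into (inside, outside, count) triples."""
    entries = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 3:
            inside, outside, count = (int(f) for f in fields)
            entries.append((inside, outside, count))
    return entries


def host_uid_for_root(entries: List[UidRange]) -> Optional[int]:
    """Return the host UID that container UID 0 maps to, or None if UID 0 is unmapped."""
    for inside, outside, count in entries:
        if inside <= 0 < inside + count:
            # A UID u in this range maps to outside + (u - inside); here u = 0.
            return outside - inside
    return None


if __name__ == "__main__" and os.path.exists("/proc/self/uid_map"):
    print("read-only root fs:", root_fs_is_read_only())
    with open("/proc/self/uid_map") as f:
        # Without user namespaces this is typically "0 0 4294967295" (root is host root).
        print("container root maps to host UID:", host_uid_for_root(parse_uid_map(f.read())))
```

With user namespaces enabled in the Docker Engine, the printed host UID would be a high, unprivileged number rather than 0.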

Running a Traefik container as a non-privileged user to avoid being “root” might create two challenges:

- Listening on ports below 1024: ports in the range [0–1023] are privileged and thus require special capabilities. Either bind Traefik to a port above 1023, or make sure you can grant the Linux capability CAP_NET_BIND_SERVICE to Traefik.
- Docker socket membership: the socket file /var/run/docker.sock is owned by the root user and a group named docker. Traefik’s unprivileged user must be a member of the docker group to access the Docker API.
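Both challenges can be checked before starting Traefik as a non-root user. The following is a minimal Python sketch under a few assumptions: it runs on Linux, and it reads the `net.ipv4.ip_unprivileged_port_start` sysctl, which on kernels that support it defines where unprivileged ports begin (1024 by default). It falls back to 1024 if that file is missing. `socket_access_report` is a hypothetical helper that reports whether the current user owns the Docker socket or belongs to its group.

```python
import grp
import os
import stat


def unprivileged_port_start() -> int:
    """Lowest port an unprivileged process may bind (1024 unless the sysctl changes it)."""
    try:
        with open("/proc/sys/net/ipv4/ip_unprivileged_port_start") as f:
            return int(f.read().strip())
    except (OSError, ValueError):
        return 1024


def needs_net_bind_service(port: int) -> bool:
    """True if binding `port` as a non-root user would require CAP_NET_BIND_SERVICE."""
    return port < unprivileged_port_start()


def socket_access_report(path: str = "/var/run/docker.sock") -> dict:
    """Describe who owns the socket at `path` and whether the current user can use it."""
    st = os.stat(path)
    try:
        group_name = grp.getgrgid(st.st_gid).gr_name
    except KeyError:  # GID with no entry in /etc/group (common inside containers)
        group_name = str(st.st_gid)
    mode = st.st_mode
    return {
        "owner_is_me": st.st_uid == os.geteuid(),
        "group": group_name,
        "in_group": st.st_gid == os.getegid() or st.st_gid in os.getgroups(),
        "group_can_read_write": bool(mode & stat.S_IRGRP) and bool(mode & stat.S_IWGRP),
    }
```

On a typical host, `socket_access_report()` would show the group as `docker`; if `in_group` is false for Traefik’s user, it cannot reach the Docker API.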

Containous: Speaking about the Docker socket, do you run Traefik on Swarm Manager Nodes?

Bret: It’s not mandatory. The point is that you can always forward the Docker socket over TCP instead of bind-mounting /var/run/docker.sock. If you forward the socket over TCP inside an encrypted Docker network, it removes the “run on a manager node” constraint for Traefik.

Please note that the optional encryption of overlay networks is not performed by Docker Swarm itself but by the Linux kernel (IPsec), which provides low-level security.

Also, it’s worthwhile to add an intermediate proxy in front of the TCP-forwarded Docker socket to filter requests, allowing only read-only requests to the API.
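One way to picture that intermediate proxy is as a filter that inspects each request before forwarding it to the Docker socket. The Python sketch below shows only the filtering decision, not the forwarding. It assumes the Docker Engine API’s URL layout: an optional `/vX.Y` version prefix followed by paths such as `/containers/json` or `/services/{id}`. The allowlist is an illustrative assumption, not the exact set of endpoints Traefik calls, so check what your Traefik version needs. Restricting to GET alone is not enough, because an endpoint such as `GET /containers/{id}/archive` can read files out of a container. For that reason the sketch also allowlists paths.

```python
import re

# Only these HTTP methods are forwarded; anything that could change state is refused.
READ_ONLY_METHODS = frozenset({"GET", "HEAD"})

# Hypothetical allowlist of read-only Docker Engine API paths (version prefix stripped).
ALLOWED_PATHS = [
    re.compile(p)
    for p in (
        r"^/_ping$",
        r"^/version$",
        r"^/info$",
        r"^/events$",
        r"^/containers/json$",
        r"^/containers/[^/]+/json$",
        r"^/services$",
        r"^/services/[^/]+$",
        r"^/tasks$",
        r"^/networks$",
        r"^/networks/[^/]+$",
        r"^/nodes$",
    )
]

# Matches an API version prefix such as "/v1.40" at the start of the path.
VERSION_PREFIX = re.compile(r"^/v\d+\.\d+(?=/)")


def is_allowed(method: str, raw_path: str) -> bool:
    """Return True if the proxy should forward this request to the Docker socket."""
    if method.upper() not in READ_ONLY_METHODS:
        return False
    path = raw_path.split("?", 1)[0]  # ignore the query string
    path = VERSION_PREFIX.sub("", path, count=1)
    return any(pattern.match(path) for pattern in ALLOWED_PATHS)
```

A default-deny allowlist is the design choice here: any endpoint not explicitly listed, including ones added in future API versions, is refused.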

I have an example Swarm stack YAML of this setup here.

With all of this in place, running Traefik on the host network of worker nodes, with an overlay network for the backends, is totally doable.

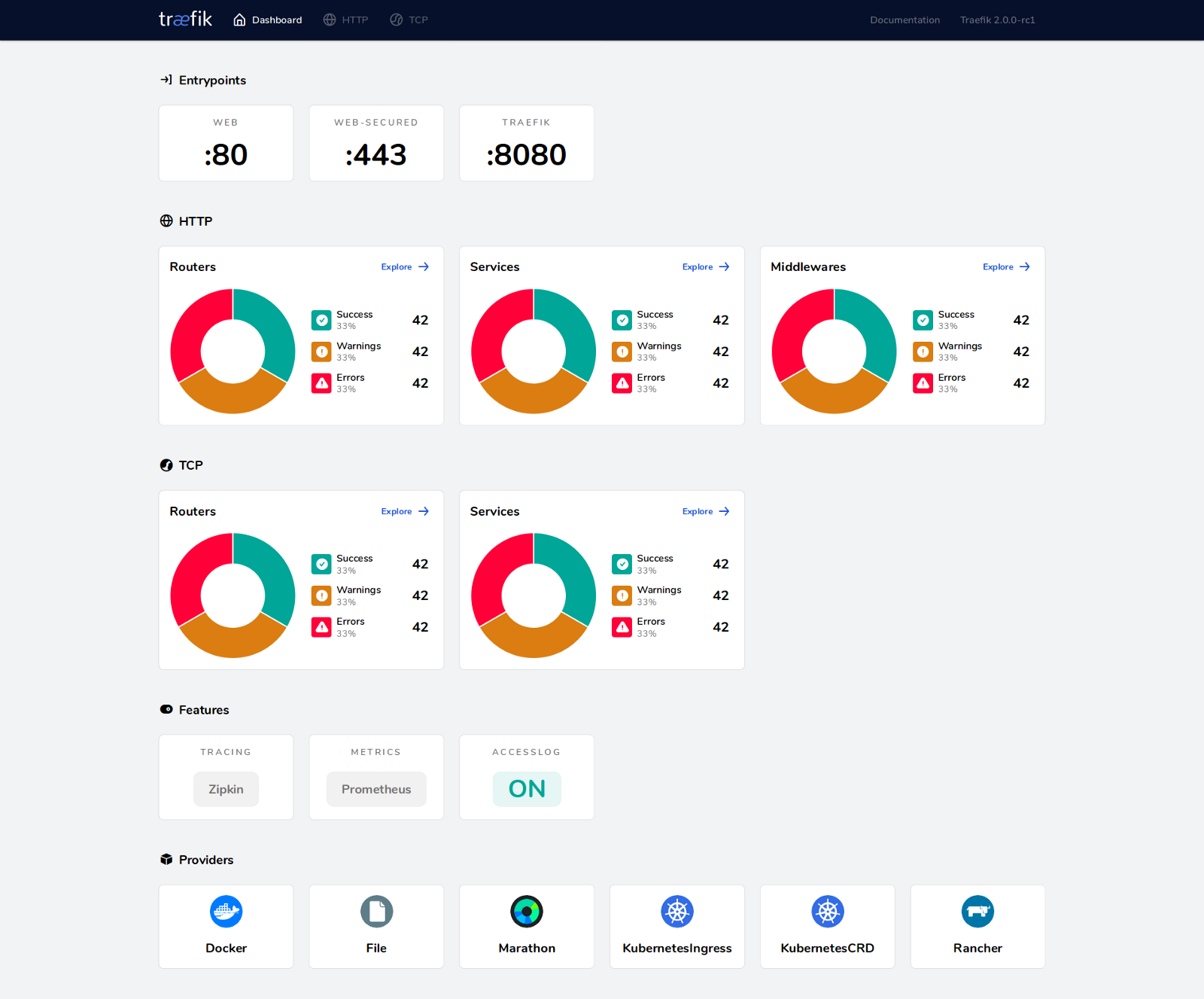

Containous: Are you using the Traefik dashboard? If yes, what is your point of view on the security of this feature?

Bret: Yes, I use the Traefik dashboard. I tend to configure it with an IP whitelist, and I don’t expose it on a public network or on the default port.
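An IP whitelist comes down to checking the client’s address against a list of allowed CIDR ranges. Traefik does this for you through configuration. The Python sketch below only illustrates the decision involved, using the standard `ipaddress` module. The ranges in `DASHBOARD_WHITELIST` are hypothetical placeholders, not recommended values.

```python
import ipaddress
from typing import Iterable


def ip_allowed(client_ip: str, allowed_ranges: Iterable[str]) -> bool:
    """Return True if `client_ip` falls inside any of the CIDR ranges in `allowed_ranges`."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed address: deny by default
    for cidr in allowed_ranges:
        network = ipaddress.ip_network(cidr, strict=False)
        # Compare only like with like: an IPv4 address never matches an IPv6 range.
        if addr.version == network.version and addr in network:
            return True
    return False


# Hypothetical whitelist: an office LAN and a VPN range.
DASHBOARD_WHITELIST = ["192.168.1.0/24", "10.8.0.0/16"]
```

For example, `ip_allowed("192.168.1.42", DASHBOARD_WHITELIST)` is true, while a public address such as `203.0.113.7` is rejected.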

Publicly exposing an admin dashboard is a mistake that keeps happening: just look at incidents such as the Kubernetes dashboard hack at Tesla.

I’m a huge fan of “secure by default” tools, and I wish more tools were like Docker and Swarm in this way. Over my 25 years in tech helping many companies with infrastructure, I’ve learned that if authentication and encryption are optional, many people won’t bother with them.

Containous: Do you have a recommendation about using the “default” Docker image for Traefik based on “scratch” versus the “Alpine Linux” image? Why?

Bret: As a rule of thumb, I would avoid “scratch” (or distroless) images until everyone in the pipeline knows how to operate them. Size doesn’t matter much unless you’re running an IoT business. Compared to the pain and suffering of having “no tools,” using “scratch” doesn’t feel like a good trade-off unless you have specialists on board. The “scratch” image feels like a “Golang developer” habit, but for the Ops team it’s another story: what about not being able to docker exec into a shell or use kubectl cp?

Hopefully, the Kubernetes ephemeral containers feature will help make this possible for more people.

In general, container newcomers need to learn by doing, so I wouldn’t use “scratch” here, to keep the learning curve smooth.

I also share this opinion about the host OS. Too many people try to use a “Container OS” too early in their organisational learning. It tends to add lots of risk without a big productivity boost. Stick with your traditional Linux distribution until you’ve had significant production Docker usage.

Containous: What is your recommendation for the load balancer in front of Swarm? Should you create an external load balancer pointing to the nodes, use DNS, or something else? And why?

Bret: The expected answer is “It’s complicated” or “It depends” (laughs).

Most companies already have their own solution for load balancing, including cloud load balancers and datacenter hardware load balancers. They point their load balancer to the Docker Swarm nodes and rely on Traefik to provide application-layer load balancing inside the cluster. Pro tip: don’t point LBs to all your Swarm nodes. Pick 2–3 worker nodes and make those the ingress points, which will make troubleshooting and load easier to manage.

However, I call the “DNS round robin” solution the “poor person’s LB,” because it takes very little time to make it work. It’s simple when the client is a web browser, but because you can’t control the client, that’s where its limits show (DNS caching, no retry patterns, etc.). Another limit: if showing HTTP 503 errors to users is a problem, don’t count on web browsers to retry against another node (even though Traefik could do that). It’s the recommended solution in my course “Solo DevOps,” where the context is “only one person in charge of the DevOps tasks, with so many tools involved.” Once you have an external load balancer solution, it’s likely best to stick with it in all but the smallest projects.

As a general rule, ask yourself: “When it goes down, do you lose money? If yes, then go for load balancing with health checks.”
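The difference between “poor person’s” DNS round robin and a load balancer with health checks fits in a few lines. In the Python sketch below, DNS round robin is modeled as blindly cycling through the nodes, while the health-checked version skips nodes that fail their check. The worker names and the `is_healthy` callback are hypothetical. A real load balancer would run actual HTTP or TCP probes instead of reading a dictionary.

```python
import itertools
from typing import Callable, Iterator, List


def dns_round_robin(nodes: List[str]) -> Iterator[str]:
    """Like DNS round robin: hand out every node in turn, healthy or not."""
    return itertools.cycle(nodes)


class HealthCheckedRoundRobin:
    """Round robin that only returns nodes passing `is_healthy`."""

    def __init__(self, nodes: List[str], is_healthy: Callable[[str], bool]) -> None:
        self._nodes = list(nodes)
        self._is_healthy = is_healthy
        self._next_index = 0

    def pick(self) -> str:
        # Try each node at most once per call, starting after the last one handed out.
        for _ in range(len(self._nodes)):
            node = self._nodes[self._next_index]
            self._next_index = (self._next_index + 1) % len(self._nodes)
            if self._is_healthy(node):
                return node
        raise RuntimeError("no healthy nodes available")


# Hypothetical ingress workers, one of them down.
health = {"worker-1": True, "worker-2": False, "worker-3": True}
nodes = list(health)
dns = dns_round_robin(nodes)
lb = HealthCheckedRoundRobin(nodes, health.get)
print([next(dns) for _ in range(3)])  # ['worker-1', 'worker-2', 'worker-3']
print([lb.pick() for _ in range(3)])  # ['worker-1', 'worker-3', 'worker-1']
```

DNS round robin keeps sending a third of the clients to the dead `worker-2`, while the health-checked picker routes around it. That gap is what the “do you lose money?” question is really about.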

Try both solutions for educational purposes. It’s really valuable.

Containous: Have you tried the new Traefik v2.0?

Bret: Not yet, but it has made quite some noise in the Docker Captain channel. Maybe that’s because they are “Golang people,” but it looks neat.

Containous: Is there something you would like to tell the Traefik community?

Bret: Traefik is a really nice tool because everything is configurable from the command line when starting in a container (using CMD). TOML files are fine, but it’s great to have the option to skip that and configure it dynamically at runtime.

Also, everything is streamlined for the container world, with a linear learning curve: configuration management is not mandatory even for rich features such as Let’s Encrypt.

Containous: Thanks a lot for these insights Bret! Happy to have this exchange. Let’s meet again for a live session next time!