Building Your Networking Strategy Using Multi-Cloud, Hybrid Cloud or Multi-Orchestrator Architectures

As businesses and their applications grow, so do the demands placed on their networks. Networking strategies are required to manage so many services spread across such a broad surface. But with so many kinds of networks out there, it isn’t always obvious which kind your organization is managing.

The terms hybrid cloud, multi-cloud, and multi-orchestrator are often used interchangeably, but they are three distinctly different architectures used in networking strategies, each with its own advantages and disadvantages. In this article, we will explore each in detail and highlight the differences between their basic and unified forms.

Hybrid cloud architectures

Hybrid cloud architectures run workloads both in data centers controlled by the organization and in public or private cloud infrastructure. A company might pursue this architecture for several reasons.

A hybrid cloud setup may be a temporary phase of a wider cloud migration project. For example, a company may run legacy services in VMs or on bare-metal servers in an internal data center while also introducing microservices to a container orchestrator in a public cloud provider. A hybrid cloud setup may also be required in highly regulated industries that handle sensitive information, such as healthcare and financial services. Sensitive information can be stored in internal data centers, while less sensitive information lives in the cloud.

Let’s examine what basic and unified hybrid cloud architectures look like.

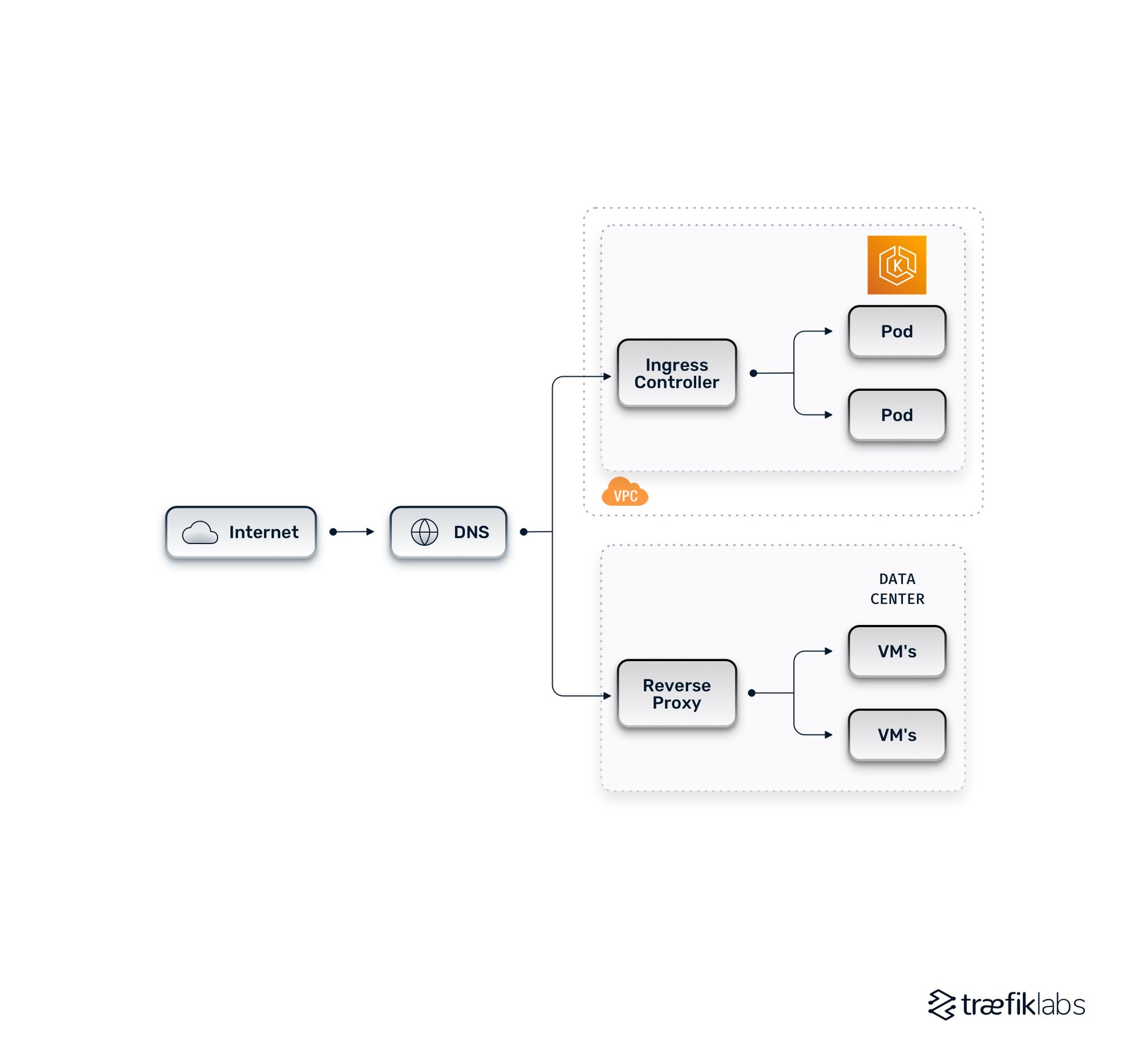

What is a basic hybrid cloud architecture?

Hybrid cloud architectures combine public and private clouds. For example, an organization might run legacy services in VMs in a data center, with a reverse proxy routing traffic to individual services, alongside a Kubernetes cluster running applications in pods. In the cluster, an ingress controller plays a role similar to the on-prem reverse proxy: it handles incoming requests and routes them to the correct pod. DNS records point traffic to either the cloud or the data center. This architecture is usually the first step in a cloud migration process.

There are several challenges to be aware of when adopting this architecture, such as how to handle the initial routing by environment. DNS routes traffic based on the environment in which an application lives. The routing infrastructure is also duplicated, as the ingress controller and reverse proxy handle similar functions but in different environments.
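
To make the DNS-based split concrete, here is a minimal sketch of how each host name ends up tied to one environment's routing layer. The host names, addresses, and the `entry_point_for` helper are hypothetical and exist only for illustration; real DNS resolution is of course handled by your DNS provider, not by application code.

```python
# Hypothetical DNS records: each host name resolves to the entry point of
# exactly one environment. Names and addresses are illustrative only.
DNS_RECORDS = {
    "legacy.example.com": "203.0.113.10",  # reverse proxy in the data center
    "shop.example.com": "198.51.100.20",   # ingress controller in the cloud
}

# Each environment runs its own routing layer behind that address.
ENTRY_POINTS = {
    "203.0.113.10": "data-center reverse proxy",
    "198.51.100.20": "cloud ingress controller",
}


def entry_point_for(host: str) -> str:
    """Return which routing layer receives traffic for a host name."""
    return ENTRY_POINTS[DNS_RECORDS[host]]


if __name__ == "__main__":
    for host in DNS_RECORDS:
        print(host, "->", entry_point_for(host))
```

Moving a service from the data center to the cloud in this model means changing its DNS record and configuring it again in the other routing layer, which is where the duplication shows up.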

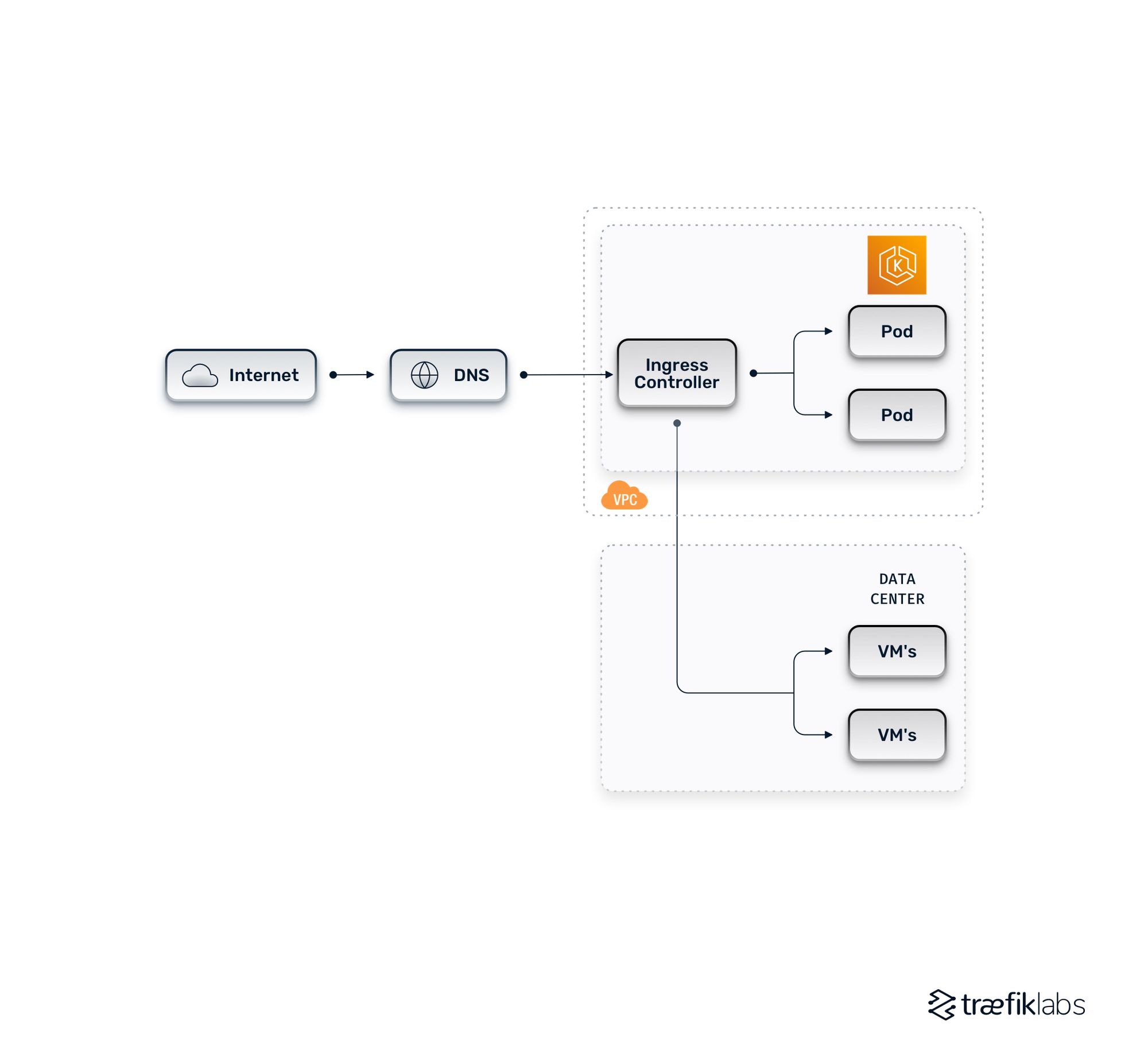

What is a unified hybrid cloud architecture?

A unified hybrid cloud architecture is a variation of a basic hybrid cloud architecture that consolidates the routing approach. A centralized ingress controller handles everything, routing traffic from the internet to pods within a cluster and also to legacy services within a data center. This approach is simpler to operate because there are fewer routing tools and infrastructure components to look after, which reduces both maintenance and room for error.

Pick a unified ingress tool that is compatible with different workload types. It should be able to handle Kubernetes service discovery as well as the routing of traffic to static IPs or host names in VMs in the data center. While less maintenance is required, more planning is involved.
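
As a rough illustration of what "compatible with different workload types" means, the sketch below resolves a host name either through discovered Kubernetes endpoints or through a fixed address for a legacy VM. Everything here is an assumption made for the example: the `Route` type, the `DISCOVERED_PODS` table (a hard-coded stand-in for real service discovery), and all names and addresses.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Route:
    host: str    # public host name handled by the unified ingress
    kind: str    # "kubernetes" (discovered) or "static" (fixed address)
    target: str  # service name for Kubernetes, host:port for static


# Stand-in for Kubernetes service discovery; a real tool would query the
# cluster API instead of reading a hard-coded table.
DISCOVERED_PODS: Dict[str, List[str]] = {
    "checkout": ["10.0.1.5:8080", "10.0.1.6:8080"],
}

ROUTES = [
    Route("shop.example.com", "kubernetes", "checkout"),
    Route("billing.example.com", "static", "192.0.2.15:9000"),  # legacy VM
]


def backends_for(host: str) -> List[str]:
    """Resolve a host name to backend addresses, whatever the workload type."""
    for route in ROUTES:
        if route.host != host:
            continue
        if route.kind == "kubernetes":
            return list(DISCOVERED_PODS.get(route.target, []))
        return [route.target]
    return []
```

The point of the sketch is that one routing table covers both environments, so a service can move from a VM to a pod by changing its route entry rather than its public host name.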

Ask yourself a few key questions when adopting a unified ingress tool. Is your application particularly sensitive to latency? Have you planned your networking and firewall rules so that the right ports and protocols are open for communication across environments? Is everything that shouldn’t be open for communication locked down? Your chosen tool should effectively and securely handle separate workload types across multiple environments.

Multi-cloud architectures

Rather than running a set of workloads in a single cloud provider, companies leveraging multi-cloud architectures use several. They might have one Kubernetes deployment running in GKE on GCP, some containers running as ECS services in AWS, and maybe a few VMs running in Azure. These workload types are interchangeable across cloud providers; the same mix could be distributed differently. Hyperscale cloud providers have long competed with each other for market share, and multi-cloud architectures allow companies to take advantage of several.

There are many benefits to this approach. Multi-cloud architectures increase resilience and fault tolerance. While you hopefully don’t need to plan for a major public cloud outage, it’s still a good idea to have a risk mitigation strategy that spreads your deployments across vendors. A multi-cloud architecture could be part of an overall strategy for cost optimization, as different cloud providers might have comparable services at varying prices. You may also want to take advantage of unique services offered by different providers. Multi-cloud architectures also help you avoid the vendor lock-in that comes from putting all your eggs in one basket (just be careful to avoid multi-cloud lock-in).

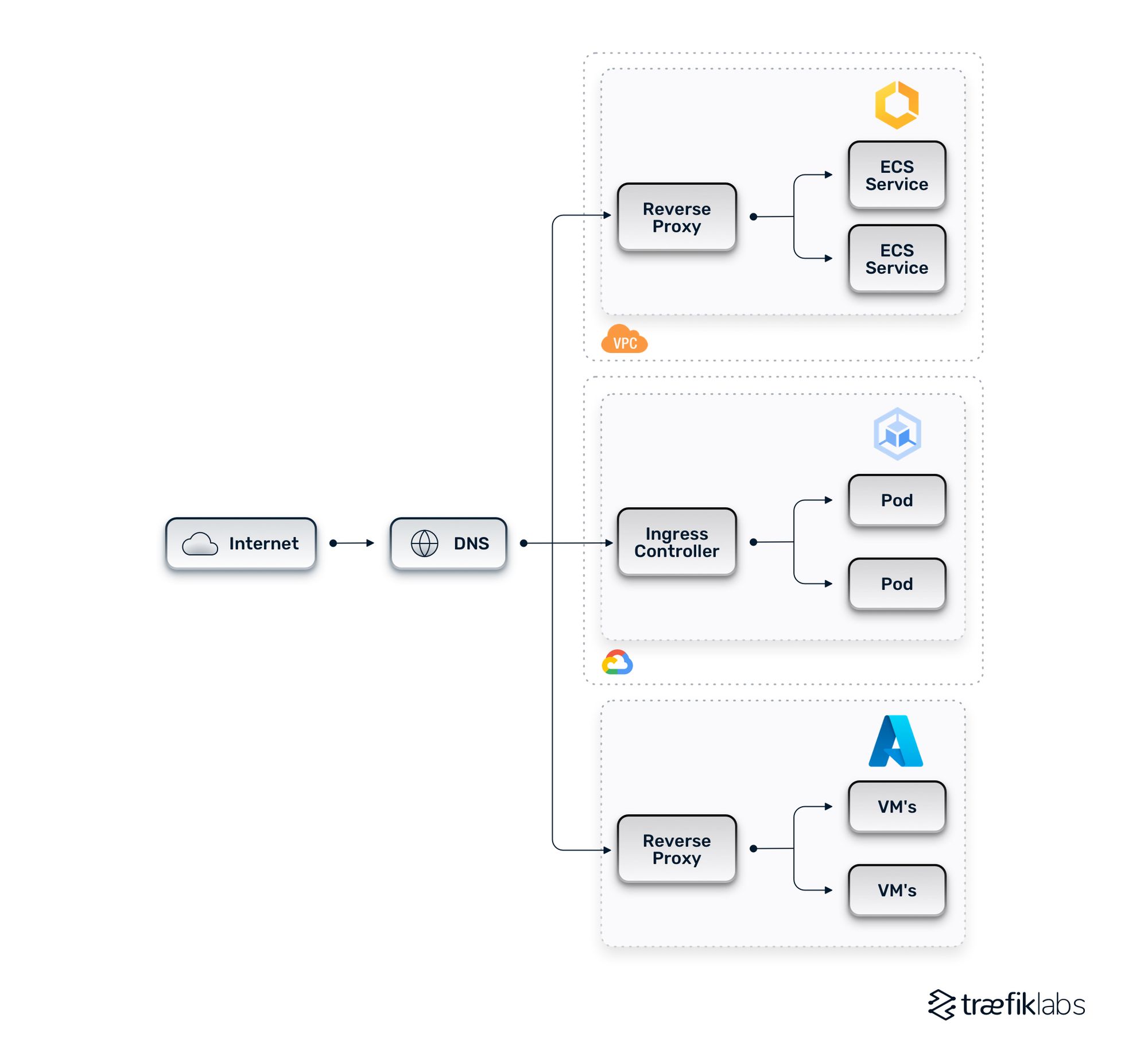

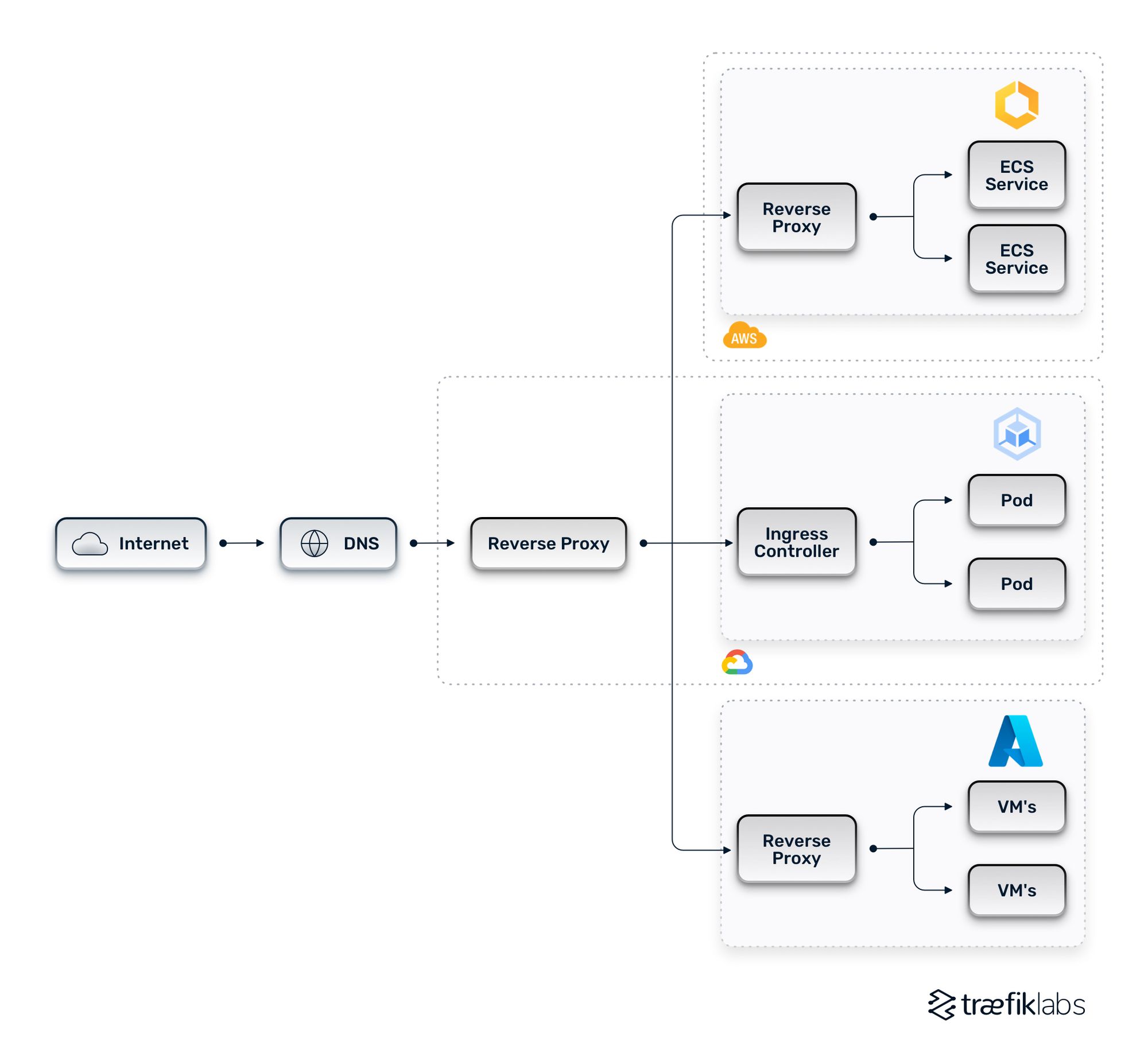

What is a basic multi-cloud architecture?

Let’s walk through an example of a standard multi-cloud architecture, which takes a DNS-based approach.

In the accompanying diagram, a few ECS services live in an ECS cluster, a few Kubernetes pods in a GKE cluster, and a few VMs in Azure. In front of each set of workloads is a reverse proxy or ingress controller. The two terms can be used interchangeably here, as both route incoming requests to the correct back-end service. The biggest challenge to overcome with this approach is that it is prescriptive, meaning the routing options are somewhat inflexible.

What is a unified multi-cloud architecture?

A more evolved multi-cloud architecture adds a reverse proxy in the front that can be thought of as an edge reverse proxy or a layer one reverse proxy. The reverse proxy handles the initial routing to individual clusters and unifies routing.

A unified multi-cloud architecture allows for far more sophisticated load balancing schemes. For example, you can expose and route unified API host names across multiple clouds by leveraging path-based routing. You can abstract the location of the actual services away from client requests.
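
Path-based routing at the edge can be sketched as a longest-prefix match from URL paths to per-cloud entry points. This is a minimal illustration, not how any particular proxy implements it; the prefixes, upstream URLs, and the `upstream_for` helper are hypothetical.

```python
from typing import Optional

# Hypothetical mapping of URL path prefixes to per-cloud entry points.
PATH_ROUTES = {
    "/orders": "https://ingress.gke.example.internal",
    "/orders/reports": "https://proxy.azure.example.internal",
    "/users": "https://proxy.ecs.example.internal",
}


def upstream_for(path: str) -> Optional[str]:
    """Pick the upstream whose prefix is the longest match for the path."""
    matches = [
        prefix
        for prefix in PATH_ROUTES
        if path == prefix or path.startswith(prefix + "/")
    ]
    if not matches:
        return None
    return PATH_ROUTES[max(matches, key=len)]
```

A client calling a single API host name never learns which cloud served the request: `/orders/42` and `/orders/reports/2024` share a host but land in different providers.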

Unified multi-cloud architectures can increase latency, as they add an additional stop to the routing path. They also require manual configuration to keep the list of locations in the first layer up to date, unless you have a tool that supports the dynamic syncing of service lists. Either way, make sure you get up to speed on best practices for networking and security.
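
To show what keeping the first layer up to date involves, here is a small sketch that compares the edge proxy's current service list with a freshly discovered one and reports the differences. The `sync_upstreams` function and the service names are assumptions for illustration; a tool with dynamic syncing would run something like this continuously rather than on demand.

```python
from typing import Dict, List, Tuple


def sync_upstreams(
    current: Dict[str, str], discovered: Dict[str, str]
) -> Tuple[Dict[str, str], List[str]]:
    """Return the updated edge table and a log of what changed."""
    changes: List[str] = []
    for name in sorted(set(current) | set(discovered)):
        if name not in discovered:
            changes.append(f"removed {name}")
        elif name not in current:
            changes.append(f"added {name} -> {discovered[name]}")
        elif current[name] != discovered[name]:
            changes.append(f"updated {name} -> {discovered[name]}")
    return dict(discovered), changes


if __name__ == "__main__":
    # Hypothetical service-to-location tables.
    current = {"orders": "gke", "users": "ecs"}
    discovered = {"orders": "azure", "search": "gke"}
    table, changes = sync_upstreams(current, discovered)
    for line in changes:
        print(line)
```

Without automation, each line in that change log is an edit someone has to make by hand in the edge configuration.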

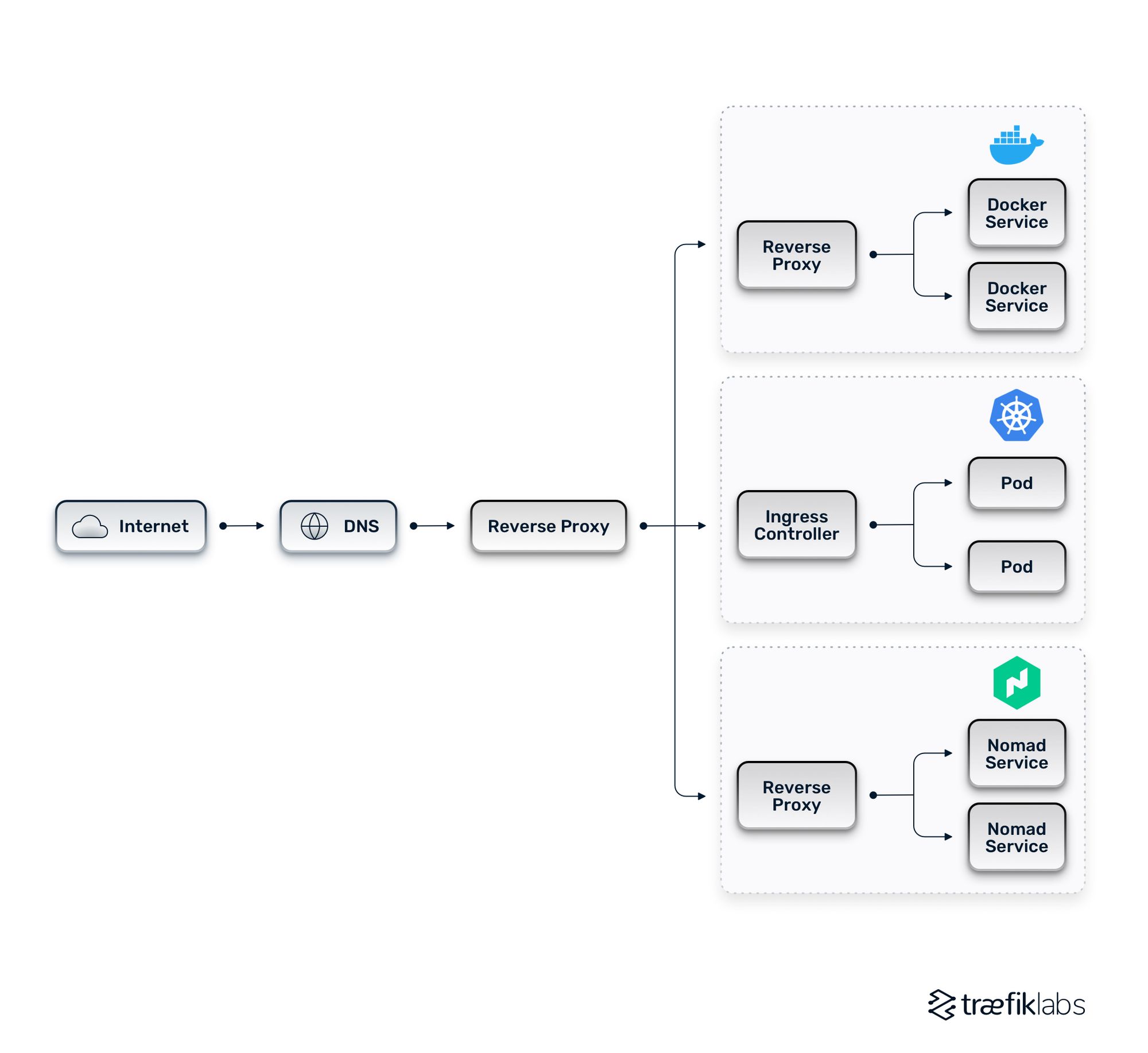

Multi-orchestrator architectures

Multi-orchestrator architectures consist of applications running as containers within more than one container orchestrator. This could take the form of different orchestrators running within the same cloud or environment, and it could also overlap with a hybrid or multi-cloud architecture.

For example, you could have a greenfield application running in a cloud-based Kubernetes cluster as well as existing services in Docker Swarm. A multi-orchestrator architecture could be temporary if you’re migrating between orchestrators. You might be consolidating on Kubernetes from Docker Swarm. This architecture might be less temporary if you have some applications with specific requirements that prevent full standardization on a particular orchestrator type.

Multi-orchestrator architectures must be unified, as it’s very difficult to route traffic without a consolidated solution. In a unified multi-orchestrator architecture, a reverse proxy sits in layer one to distribute traffic to the reverse proxies and ingress controllers associated with different orchestrators. In the accompanying diagram, we have Docker, Kubernetes, and Nomad.

The benefits and challenges of multi-orchestrator architectures are similar to those found in a multi-cloud setup. This approach allows for flexibility, and networking configuration is easier to change. If you choose to adopt a multi-orchestrator architecture, it’s important to select tools that can handle service discovery across different orchestrators and that can hook into various orchestrator APIs. Automation is important too: without a tool that automates the process, you will have to maintain configurations by hand. A tool that unifies the architecture also lets you configure security centrally across all clusters.
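
Service discovery across orchestrators can be pictured as a set of providers feeding one routing table. In the sketch below, each provider function is a hard-coded stand-in for a call to the corresponding orchestrator API; the function names, service names, and addresses are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# A provider returns service name -> list of endpoints for one orchestrator.
Provider = Callable[[], Dict[str, List[str]]]


def kubernetes_services() -> Dict[str, List[str]]:
    return {"checkout": ["10.0.1.5:8080"]}


def swarm_services() -> Dict[str, List[str]]:
    return {"inventory": ["10.1.0.7:5000"], "checkout": ["10.1.0.9:8080"]}


def nomad_services() -> Dict[str, List[str]]:
    return {"reports": ["10.2.0.3:7000"]}


PROVIDERS: Dict[str, Provider] = {
    "kubernetes": kubernetes_services,
    "swarm": swarm_services,
    "nomad": nomad_services,
}


def build_routing_table(
    providers: Dict[str, Provider],
) -> Dict[str, List[Tuple[str, str]]]:
    """Merge every orchestrator's services into one table for layer one."""
    table: Dict[str, List[Tuple[str, str]]] = {}
    for orchestrator, list_services in providers.items():
        for service, endpoints in list_services().items():
            for endpoint in endpoints:
                table.setdefault(service, []).append((orchestrator, endpoint))
    return table
```

Here the `checkout` service appears in both Kubernetes and Swarm, which is the situation you would expect midway through a migration between orchestrators.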

How to evolve your advanced networking architecture with a multi-layered setup

Organizations managing advanced architectures will gain much from unifying their network. In hybrid cloud, multi-cloud, or multi-orchestrator architectures, a consolidated solution will increase efficiency and enable automation at scale. By adding a second reverse proxy or ingress controller to a second layer in front of your applications, you can unify your architecture.

Traefik Enterprise is a unified cloud native networking solution that brings ingress control, API management, and service mesh together in one simple control plane. It eases microservices networking complexity for developers and operators across an organization. The Traefik Provider allows organizations to build and operate a multi-layer architecture, and many companies have found success in doing so. AmeriSave uses Traefik Enterprise to build a two-layer architecture within which they can seamlessly transfer traffic between Kubernetes clusters in Microsoft Azure and Docker clusters in on-prem environments.

In many organizations that have reached a certain scale, more than one architecture will be in play. Some of the approaches we’ve looked at are a temporary product of a migration effort. Others are set up intentionally for the long term.

Think about your overall routing strategy when architecting. Make sure security is in place throughout. Consider whether it’s worth consolidating your networking solution with a multi-layered setup. Whether you embrace a hybrid cloud, multi-cloud, and/or multi-orchestrator architecture, choose tools that are compatible with your setup and that simplify its management and configuration.