Beyond Kubernetes: Bringing Microservices Together with Service Mesh

When adopting the microservices application model, Kubernetes is a natural starting point. Extensible, open source, and with a thriving ecosystem, Kubernetes has emerged as the go-to orchestrator for containerized infrastructure. When you use it as the foundation for microservices, however, you may find that Kubernetes alone isn’t enough to handle the more complex networking challenges that arise. Handling those challenges is the job of a service mesh.

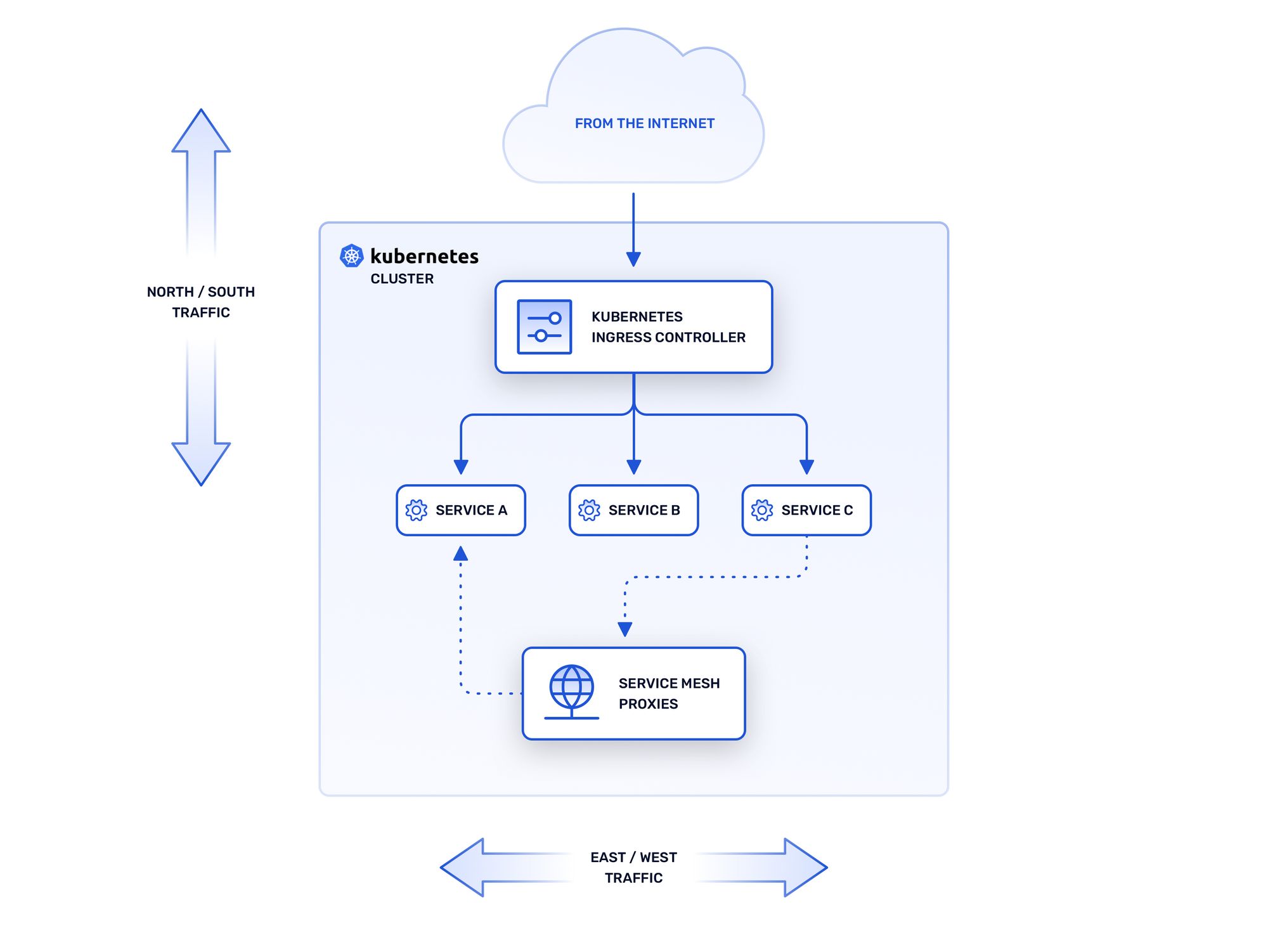

One of the most important aspects to consider about the microservices model is its heavy dependence on networking. Unfortunately, a network is seldom as reliable or resilient as its hypothetical diagram suggests. Services fail, network routes change or disappear, and unexpected traffic can disrupt normal usage patterns. This is even more true for containerized microservices, which by their nature tend to be stateless, ephemeral, and disposable. Maintaining the performance and stability of such an environment is anything but simple. Kubernetes addresses the first part of this challenge by automating the lifecycles of containers and their associated applications. When coupled with an Ingress controller, such as Contour or Traefik, Kubernetes also manages communications from the external network to workloads running in the cluster and vice versa (sometimes called north-south traffic).

Of equal importance in a microservices environment, however, are communications between services within the cluster (known as east-west traffic). While basic networking within the cluster is handled by Kubernetes itself, a service mesh is a dedicated infrastructure layer that handles many of the routine networking tasks that are necessary for a loose collection of containerized services to work together as a cohesive application.

The Role of Service Mesh

An important property of a service mesh is that it decouples east-west networking functions from application logic. As the environment scales and new services are added to the mix, those services should receive the same level of management as their peers, without code changes or refactoring. Typical service mesh functions include:

Traffic control. Communications between the cluster and the external network require routing and management, and so do communications between services on the cluster. Tasks such as advanced load balancing and rate limiting of service-to-service traffic are primary functions of the service mesh, which helps to prevent and contain disruptions and performance degradations that misconfigured or misbehaving services can cause.

Security. Even when ingress controls are in place to protect the cluster from external networking threats, attackers may still attempt to exploit trusted relationships between services on the cluster. A service mesh can help here by providing seamless authentication, access control, and management of encrypted links between services, among other security features.

Observability. Another important factor is the ability of a service mesh to provide consistent logging, metrics, and visibility into the inner workings of a cluster. The insights gained from these functions are invaluable for maintaining the health and proper operation of microservices-based applications.
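To make the traffic control function described above more concrete, here is a minimal sketch of token-bucket rate limiting, one common way to cap the rate of service-to-service requests. It is not taken from any particular mesh; the `TokenBucket` class and its `rate`, `capacity`, and `clock` parameters are hypothetical names chosen for this illustration.

```python
import time


class TokenBucket:
    """Hypothetical limiter: allows bursts up to `capacity` requests
    and refills at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate            # tokens added per second
        self.capacity = capacity    # maximum burst size
        self.clock = clock          # injectable time source (assumption, eases testing)
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        """Return True if a request may pass, consuming one token."""
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A proxy built along these lines could keep one bucket per calling service and reject or delay a request whenever `allow()` returns `False`, which keeps a misbehaving caller from overwhelming its peers.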

Options and Standards

Over the years, various forms of application middleware have implemented east-west networking functions in various ways. It is only since the rise of containerized infrastructure, and Kubernetes in particular, that the concept of a service mesh as a dedicated layer has truly crystallized. Even so, opinions on how a mesh should be implemented still differ.

Standardization offers some hope for clarity. Recently, a consortium of cloud-native software vendors has begun collaborating on the Service Mesh Interface (SMI), an evolving effort that is shepherded by the Cloud Native Computing Foundation (CNCF). The SMI specification defines APIs for many of the networking functions described earlier. Still, these APIs can be implemented in multiple ways.

The sidecar pattern is one way to design a service mesh for Kubernetes. In this model, a so-called sidecar container is deployed alongside each instance of a service within a pod to handle east-west traffic for that instance. This is a popular pattern for implementing a service mesh, and is the method adopted by the likes of Istio and Linkerd (the latter a CNCF incubating project).

An alternative method is to deploy a service mesh proxy endpoint that runs as its own pod on each node of a cluster, using the Kubernetes concept of a DaemonSet. This method has the advantage of being less invasive, in that the mesh proxy does not need to modify any Kubernetes objects and no network traffic is modified without a service owner’s consent. This is the model adopted by Maesh.
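One practical difference between the two models is how many proxy instances end up running. The following sketch, assuming exactly one proxy per service pod in the sidecar model and one proxy pod per node in the DaemonSet model, makes the comparison explicit. The function name `proxy_count` and the model labels are hypothetical.

```python
def proxy_count(model, nodes, pods):
    """Return how many mesh proxy instances a cluster would run.

    Assumes one proxy per service pod ("sidecar") or one proxy pod
    per node ("per-node", as scheduled by a DaemonSet).
    """
    if model == "sidecar":
        return pods
    if model == "per-node":
        return nodes
    raise ValueError(f"unknown model: {model}")


# Illustrative cluster: 5 nodes running 120 service pods.
sidecar_proxies = proxy_count("sidecar", nodes=5, pods=120)
per_node_proxies = proxy_count("per-node", nodes=5, pods=120)
```

The sidecar count grows with the number of service instances, while the per-node count grows only with the size of the cluster. The trade-off is that a per-node proxy is shared by every service on that node.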

How to Move Forward

So, while standardization makes it easier to know what to expect from a service mesh, important decisions remain before adopting a specific solution. Even choosing how to begin deploying a mesh can be challenging, because a mesh can disrupt existing communication patterns within a cluster.

These decisions are significantly easier in so-called greenfield projects, where the clustered, microservices-based application is built from the ground up, without dependencies on legacy infrastructure. This is a happy situation to be in, but it’s not always realistic, especially in environments where adoption of containerization is already mature.

If you need to run some services on a service mesh alongside others that are better left to manage their own east-west traffic, it may be worth looking for a loosely coupled solution, such as Maesh. This approach lets the teams that own individual services opt into the mesh when they are ready, rather than forcing a mass migration with interdependencies that may be difficult to test.
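One way such an opt-in can work is through naming: a caller reaches a service through the mesh by using an alternate hostname, and keeps the ordinary cluster name otherwise. The sketch below assumes a mesh that exposes services under an extra DNS suffix. The `.maesh` suffix, the `service_host` helper, and the service names are assumptions for illustration, so check your mesh's documentation for the actual convention.

```python
MESH_SUFFIX = "maesh"  # assumption: suffix the mesh's DNS setup resolves


def service_host(name, namespace, use_mesh=False):
    """Build the hostname a client would call (hypothetical helper).

    With use_mesh=False the request uses the ordinary in-cluster name
    and bypasses the mesh; with use_mesh=True it is addressed to the
    mesh proxy via the extra suffix.
    """
    host = f"{name}.{namespace}"
    return f"{host}.{MESH_SUFFIX}" if use_mesh else host


direct = service_host("orders", "shop")
meshed = service_host("orders", "shop", use_mesh=True)
```

Because the choice is made per call, a team can move one client at a time onto the mesh and fall back to the direct name if something misbehaves.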

However you choose to proceed, the key takeaway should be that service mesh for Kubernetes, while still an emerging technology, can often provide the “missing piece” that Kubernetes alone does not. By abstracting key east-west networking features away from application logic, mesh solutions enable distributed, microservices-based applications to operate in a way that is managed, observable, and secure, both when communicating with the outside world and within the cluster itself.

To learn more, check out this video about the advantages and disadvantages of a service mesh, and the appropriate situations for using one.